Data Analysis BLOG - description

This blog is created by Barry The Analyst, data analyst, software developer & owner of this web site. The articles contain description of various data analysis platform features with many practical examples. Main Home blog page contains following article types: Data Analysis in general, Excel, SQL/DB, Power BI, Python, JavaScript and Playwright. There are also Portfolio projects that demonstrates Data Analysis features with real Datasets. Gadgets on the right side contain article index (archive) grouped by article type, as well as some additional notes on different topics related to data analysis & software development

The page Portfolio contains portfolio projects on real Datasets using tools & technologies described in articles of this blog.

Table of Contents

2022

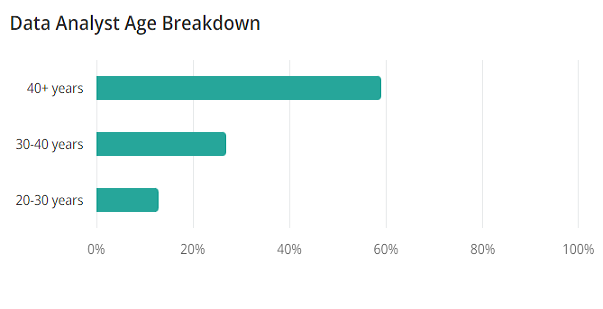

![]() 1. Data Analyst at 47 - to be or not to be?

(May)

1. Data Analyst at 47 - to be or not to be?

(May)

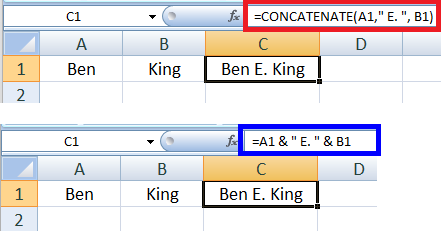

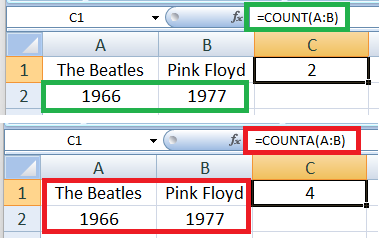

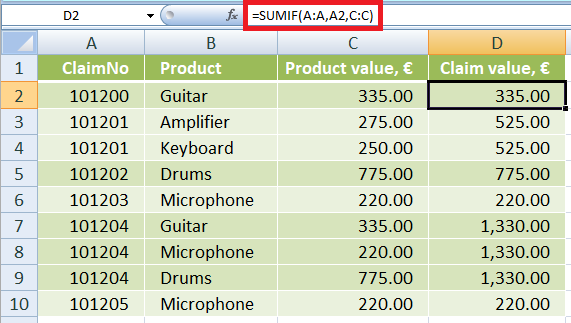

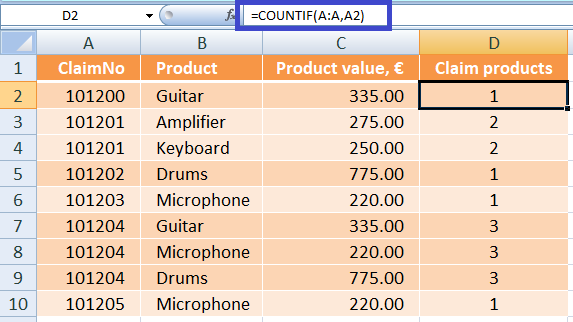

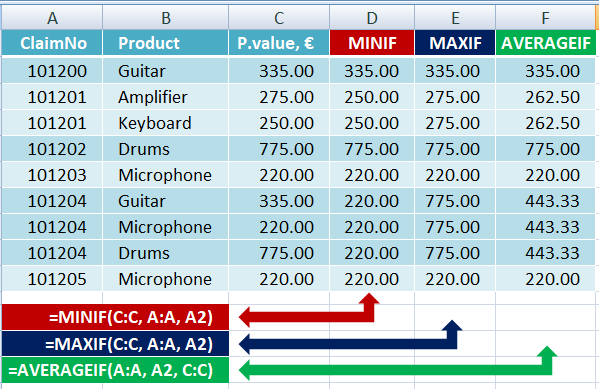

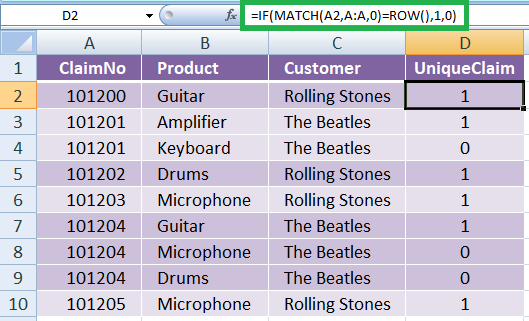

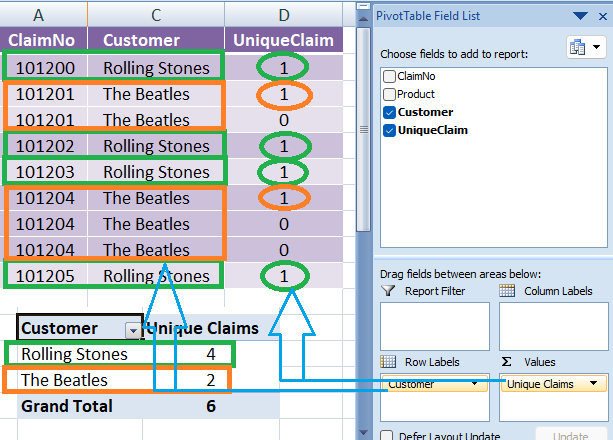

2. Core Excel functions for Data Analysis

(June)

![]()

![]() 3. Database fundamentals

(July)

3. Database fundamentals

(July)

4. Built-in data structures in Python

(August)

![]()

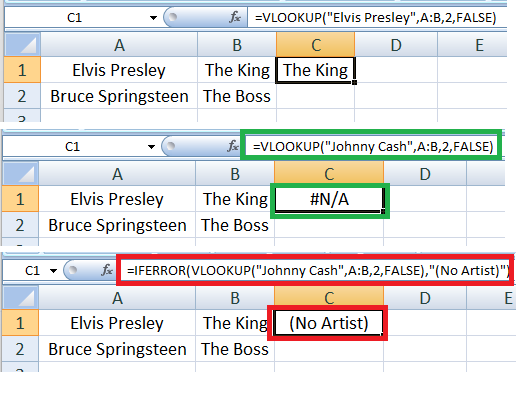

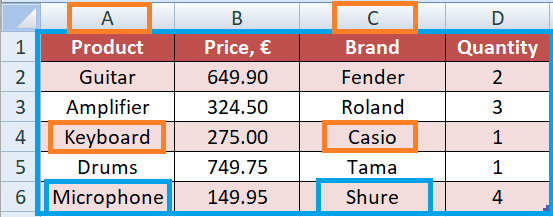

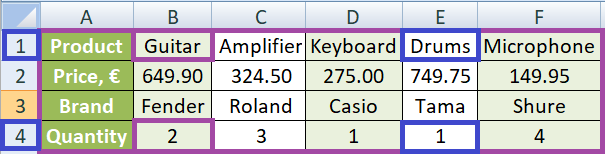

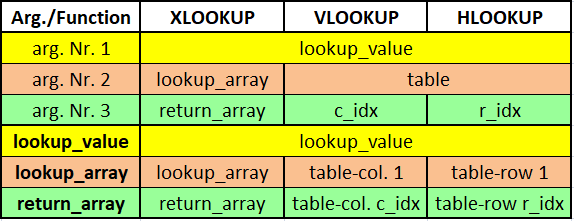

![]() 5. LOOKUP function family

(September)

5. LOOKUP function family

(September)

6. SQL essential features

(October) ![]()

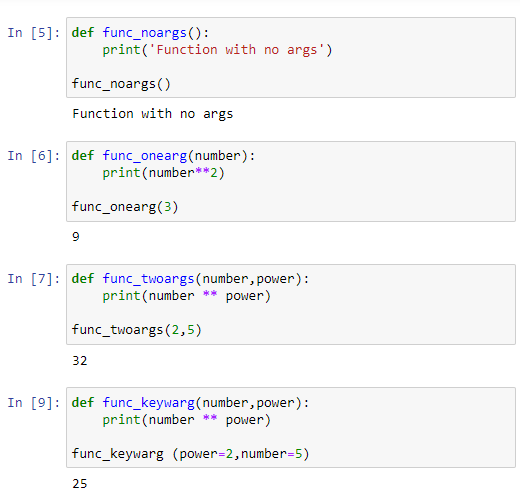

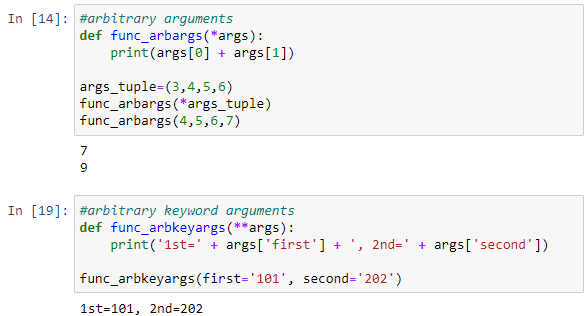

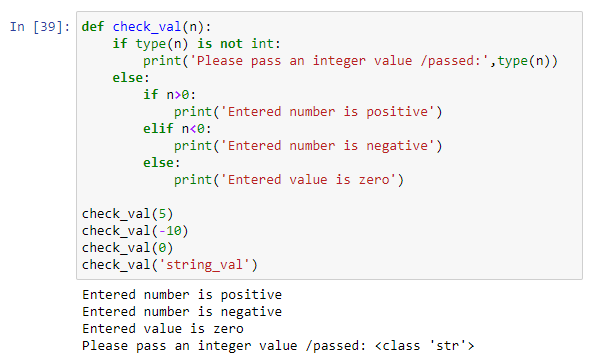

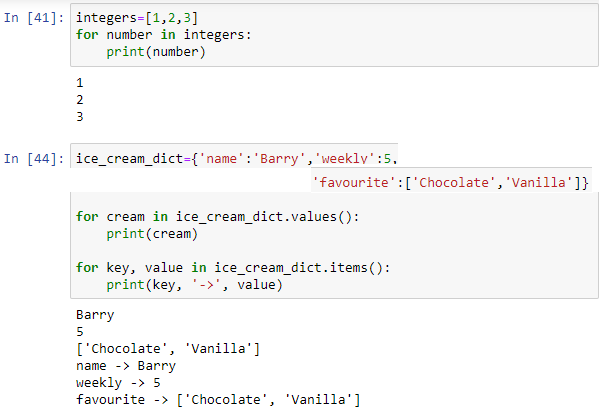

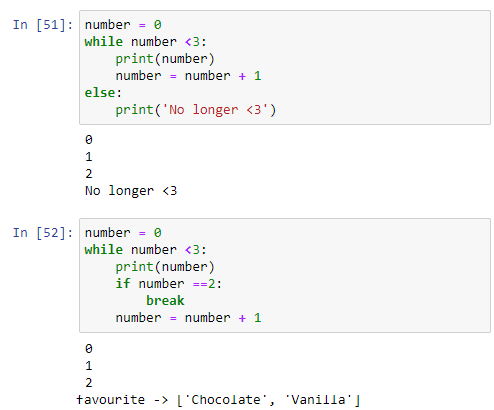

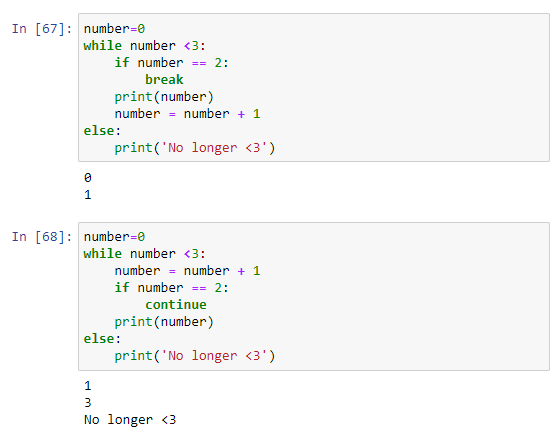

![]() 7. Branching, loops & functions

(November)

7. Branching, loops & functions

(November)

8. Data Analysis roles & goals

(December) ![]()

2023

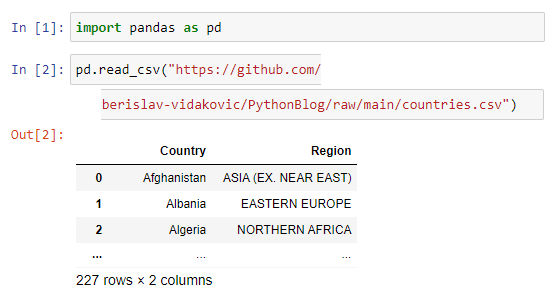

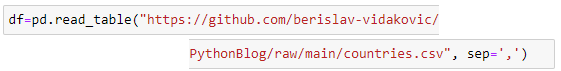

![]() 9. Python libraries - pandas

(January)

9. Python libraries - pandas

(January)

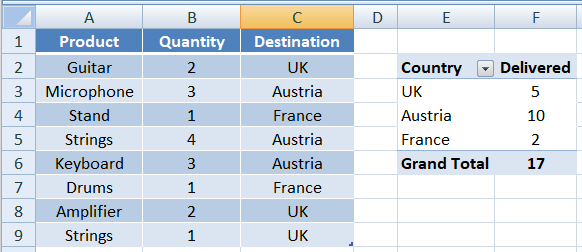

10. Using SQL for creating Pivot tables

(February) ![]()

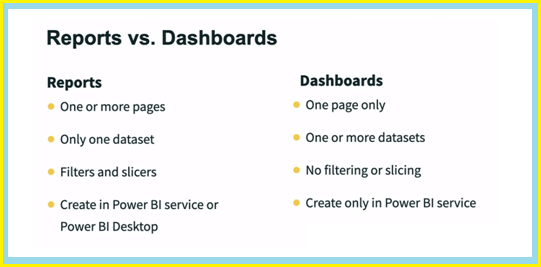

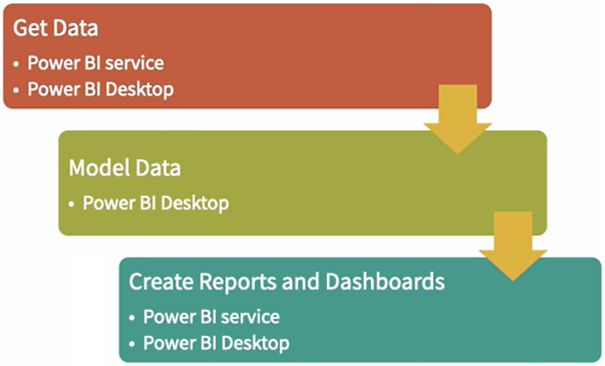

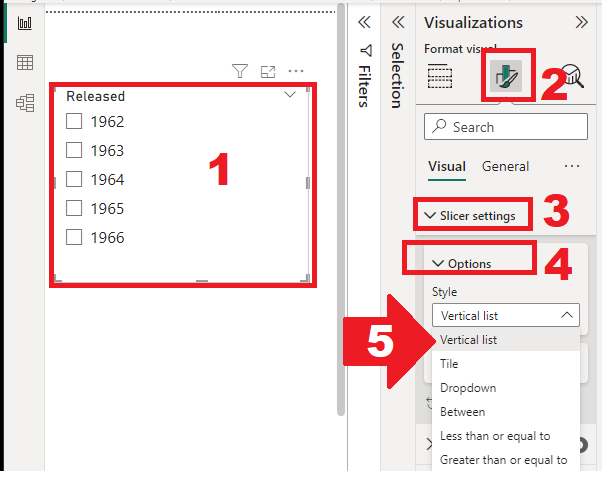

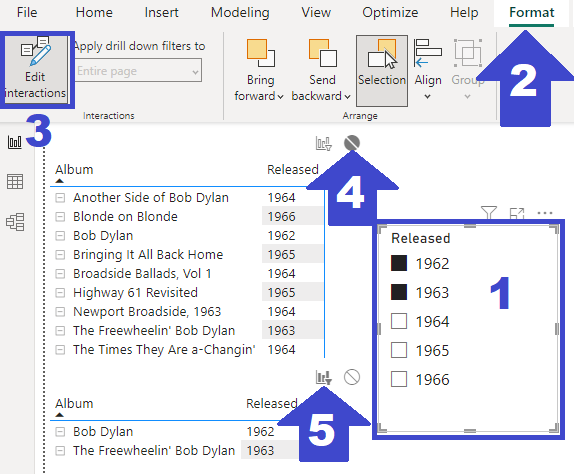

![]() 11. Power BI basics

(March)

11. Power BI basics

(March)

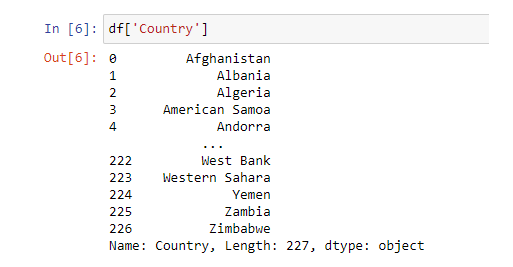

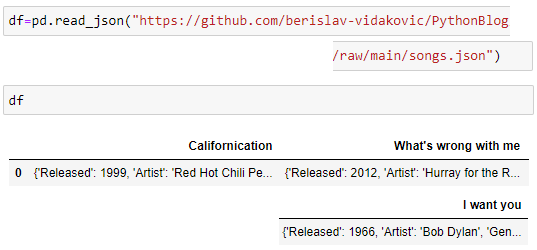

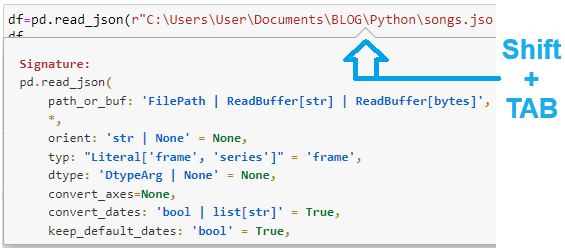

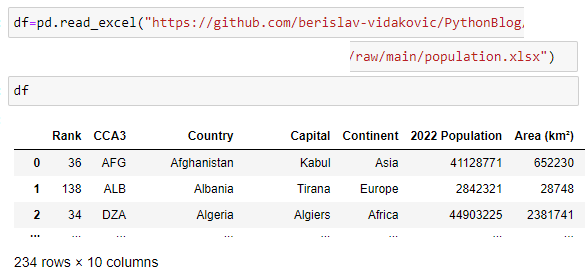

12. Reading files (csv, txt, json, xlsx)

(April)

![]()

![]() 13. Excel functions, tips & tricks

(May)

13. Excel functions, tips & tricks

(May)

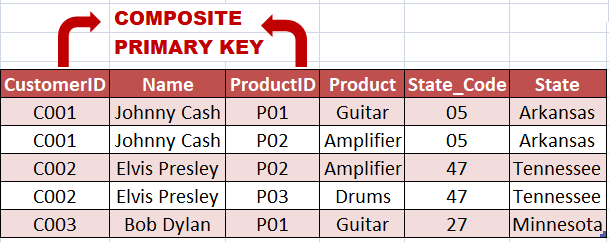

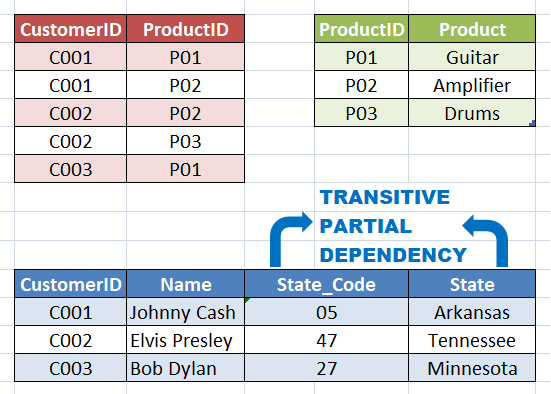

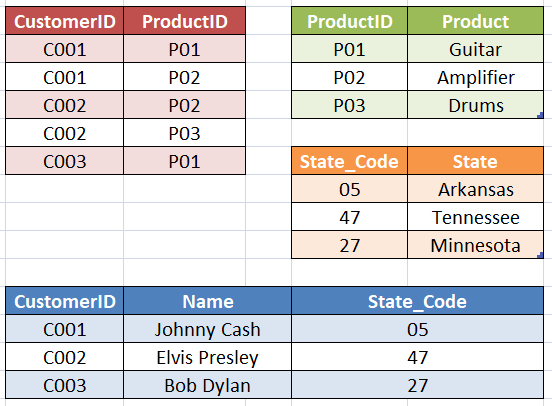

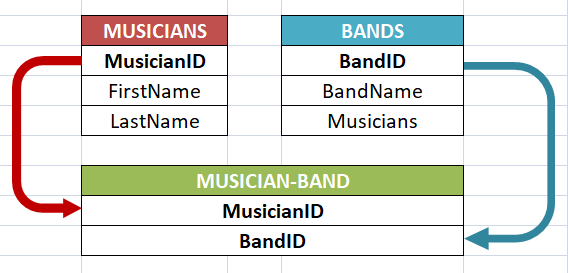

14. Database normalization - 1NF, 2NF and 3NF

(June)

![]()

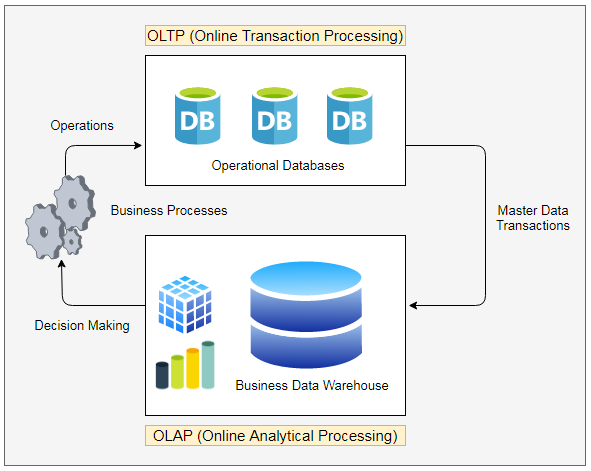

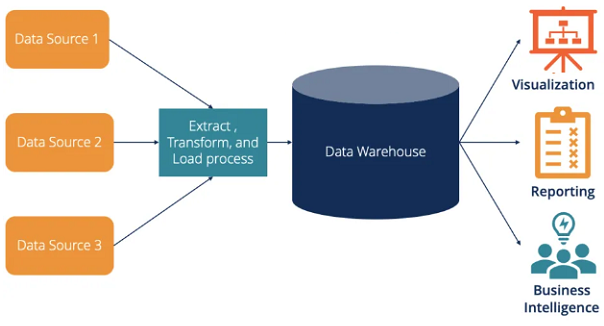

![]() 15. Data Warehouse & Data Analysis

(July)

15. Data Warehouse & Data Analysis

(July)

16. Data connection modes in Power BI

(August) ![]()

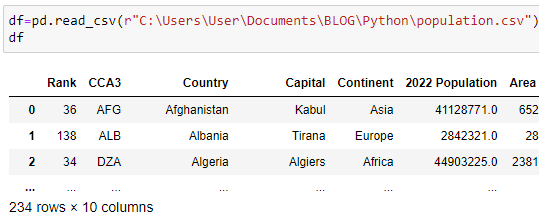

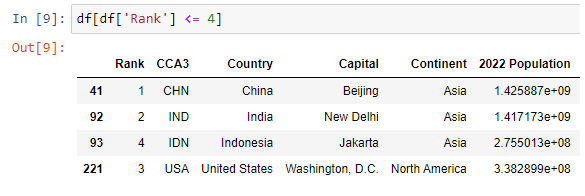

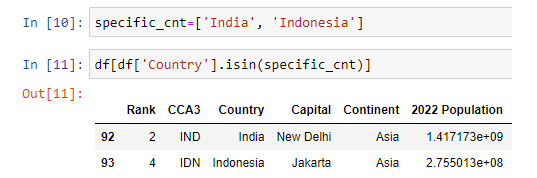

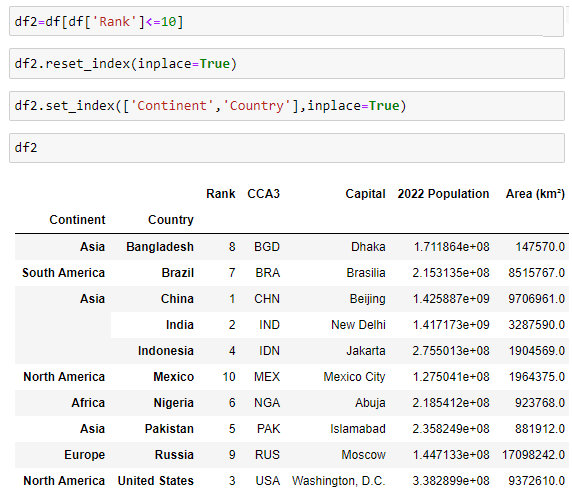

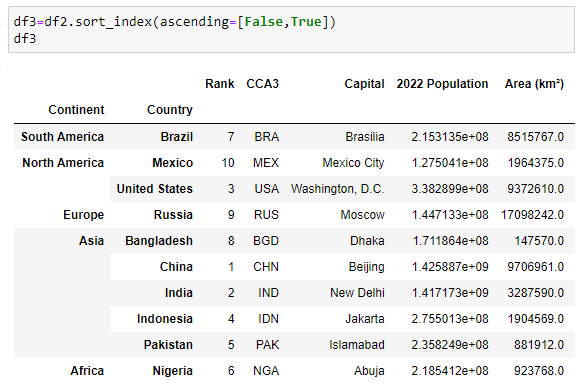

![]() 17. Filtering, sorting & indexing data

(September)

17. Filtering, sorting & indexing data

(September)

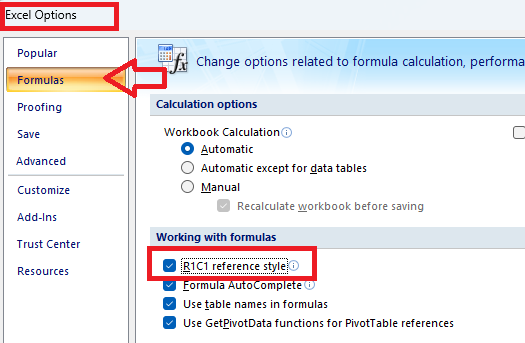

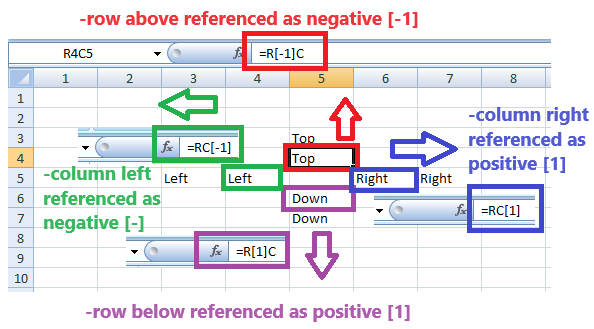

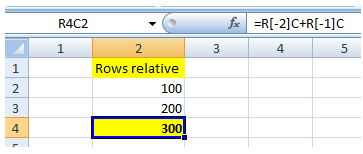

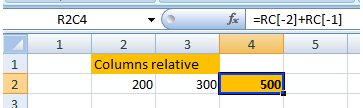

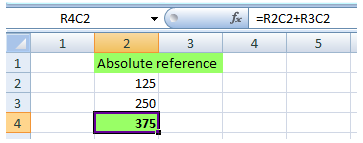

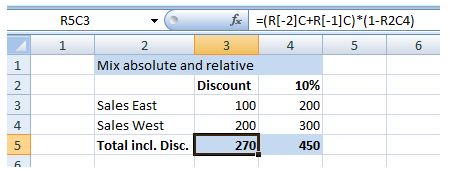

18. R1C1 notation & German conversion map

(October)

![]()

![]() 19. Specifying rules using CONSTRAINTs

(November)

19. Specifying rules using CONSTRAINTs

(November)

20. Is Data Analysis a Dying Career?

(December)

![]()

2024

![]() 21. Exploring pandas Series

(January)

21. Exploring pandas Series

(January)

22. Creating Pivot tables in Python

(February)

![]()

![]() 23. Lambda functions and map()

(March)

23. Lambda functions and map()

(March)

24. Playwright basic features

(April)

![]()

![]() 25. Pytest & Playwright Web Automation Testing

(May)

25. Pytest & Playwright Web Automation Testing

(May)

26. Playwright Test Automation with JS (1)

(June)

![]()

![]() 27. JavaScript Basics (1)

(July)

27. JavaScript Basics (1)

(July)

28. Playwright Test Automation with JS (2)

(August)

![]()

![]() 29. Playwright Test Automation with JS (3)

(September)

29. Playwright Test Automation with JS (3)

(September)

30. JavaScript Basics (2)

(October)

![]()

![]() 31. JavaScript Intermediate

(November)

31. JavaScript Intermediate

(November)

32. Automated Test Containerization

(December)

![]()

2025

![]() 33. JavaScript Intermediate (2)

(January)

33. JavaScript Intermediate (2)

(January)

34. JavaScript in Test Automation and beyond

(February)

![]()

![]() 35. NoSQL Database overview

(March)

35. NoSQL Database overview

(March)

Read more on web development...

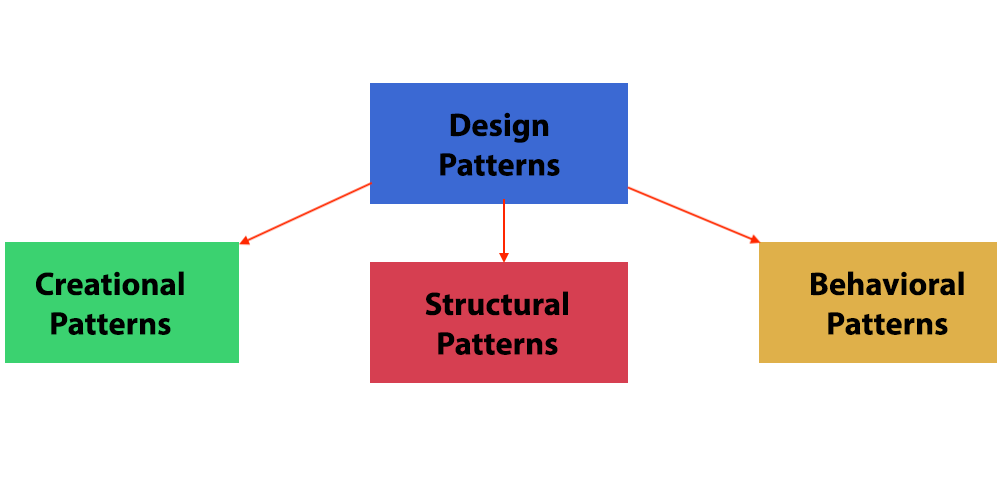

NoSQL Database overview

Sunday, 09.03.2025

NoSQL databases started gaining widespread acceptance around the mid-to-late 2000s, with their growth really accelerating during the 2010s. The term "NoSQL" was first coined by Carl Strozzi in 1998 for a relational database that didn’t use SQL, but the term didn't really take off until the mid-2000s. The rise of web applications with high traffic and the need for scalability beyond traditional relational databases (RDBMS) highlighted the limitations of SQL-based systems, particularly with horizontal scaling (across multiple machines). The inspiration for many NoSQL databases was Google's Bigtable paper published 2007, outlining a distributed storage system designed to scale horizontally.

Mainstream Adoption started on 2012 - The term NoSQL became widely recognized, and the technology started being used in production systems for major companies like Facebook, Twitter, Netflix, and LinkedIn.

By the late 2010s, NoSQL databases were considered standard solutions for specific use cases:

Today, many organizations use NoSQL in hybrid architectures alongside traditional relational databases, selecting the best database for each use case.

Key Factors Contributing to Widespread Acceptance:

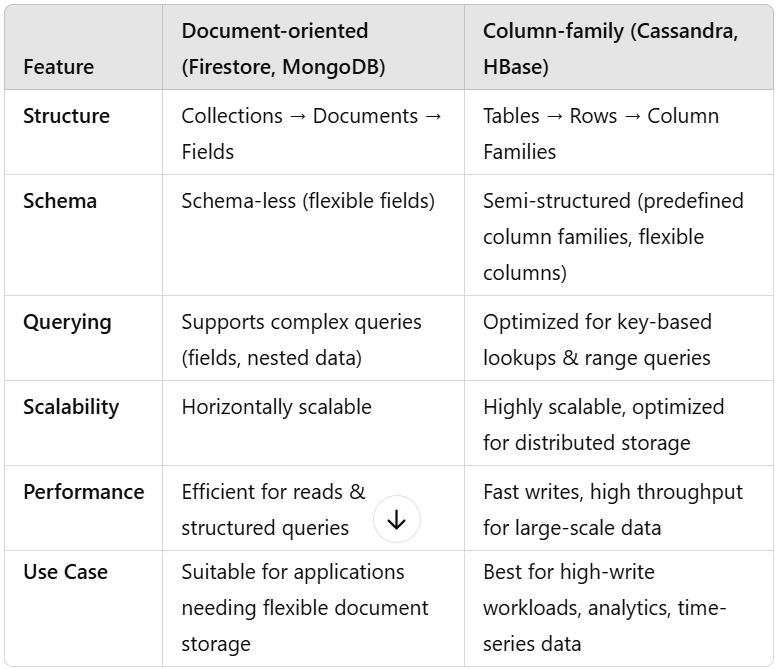

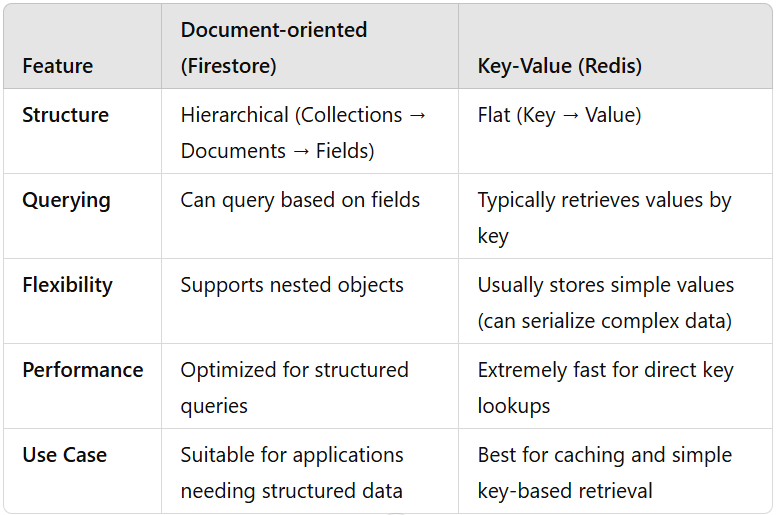

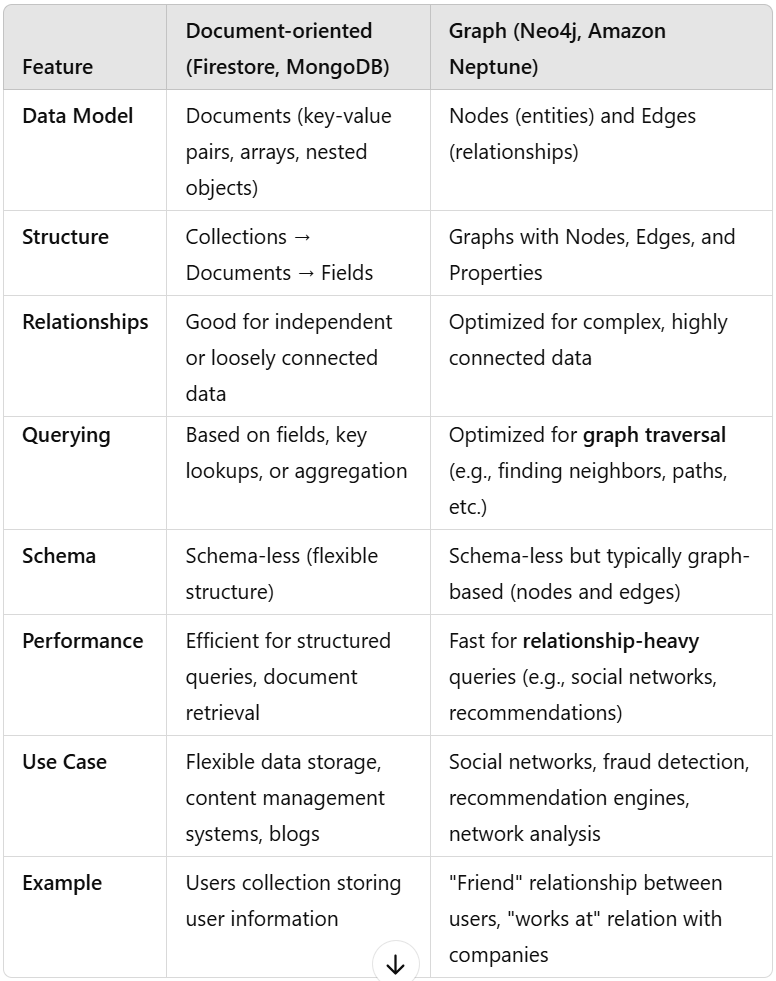

Document-type NoSQL Databases

Data is stored as documents (usually in JSON or BSON format).

Documents are grouped into collections.

Each document contains fields, which can be key-value pairs, arrays, or nested objects.

Schema-less (documents can have different structures).

Suitable for loosely connected or independent data but not optimized for complex relationships.

Primarily used for document retrieval via key lookups or more advanced queries based on fields.

Data is stored as documents (usually in JSON or BSON format).

Documents are grouped into collections.

Each document contains fields, which can be key-value pairs, arrays, or nested objects.

Schema-less (documents can have different structures).

Suitable for loosely connected or independent data but not optimized for complex relationships.

Primarily used for document retrieval via key lookups or more advanced queries based on fields.

Use cases of Document-type DB

- You need flexible schemas.

- Your data has complex, nested relationships.

- You perform frequent read queries on specific fields.

- Your data is relatively independent and doesn’t have complex relationships

Column-family type NoSQL Databases

Data is stored in tables with rows and columns, but unlike relational databases

Columns are grouped into column families,

Each row can have a variable number of columns and

Data is retrieved by row keys. Optimized for high-speed writes and distributed data.

Data is stored in tables with rows and columns, but unlike relational databases

Columns are grouped into column families,

Each row can have a variable number of columns and

Data is retrieved by row keys. Optimized for high-speed writes and distributed data.

Use cases of Column-family DB

- You handle large-scale, high-write operations (e.g., logs, time-series).

- You need fast retrieval with predictable access patterns.

- You work with distributed databases requiring high availability.

Key-value type NoSQL Databases

Data is stored as a simple key-value pair.

Keys are unique identifiers.

Values can be strings, lists, sets, or even entire serialized objects.

No hierarchy like collections and documents.

Data is stored as a simple key-value pair.

Keys are unique identifiers.

Values can be strings, lists, sets, or even entire serialized objects.

No hierarchy like collections and documents.

Use cases of Key-value DB

- You need ultra-fast reads and writes for simple key-based lookups (e.g., caching, session storage).

- Your data is mostly independent and doesn’t require complex queries or relationships.

- You require high scalability for applications handling millions of read/write operations per second.

- Your use case involves real-time processing, such as leaderboards, API rate limiting, or IoT sensor data.

- You need temporary storage with auto-expiry, like feature flags, shopping carts, or ephemeral sessions.

Graph-type NoSQL Databases

Data is represented as nodes (entities), edges (relationships between entities), and properties (information about nodes or edges).

Highly optimized for storing and traversing relationships. Nodes are linked by edges. Schema-less but typically follows a graph structure with nodes and relationships. Highly optimized for traversing and querying relationships (e.g., finding shortest paths, recommendations, etc.). Efficient querying of complex, interconnected data through graph traversal (e.g., using Cypher in Neo4j).

Data is represented as nodes (entities), edges (relationships between entities), and properties (information about nodes or edges).

Highly optimized for storing and traversing relationships. Nodes are linked by edges. Schema-less but typically follows a graph structure with nodes and relationships. Highly optimized for traversing and querying relationships (e.g., finding shortest paths, recommendations, etc.). Efficient querying of complex, interconnected data through graph traversal (e.g., using Cypher in Neo4j).

Use cases of Graph DB

- Your application involves complex relationships and you need to frequently traverse these relationships (e.g., recommendations, social networks, or fraud detection).

- You need to efficiently query the connections between entities and find patterns (e.g., shortest path, connected components).

- Your data’s value comes from its interconnectedness rather than individual data points.

JavaScript in Test Automation and beyond

Sunday, 16.02.2025

A test runner with Jest-like assertions @playwright/test is developed and maintained by the Playwright team that is built on top of the Playwright API. This test runner is tightly integrated with Playwright and is specifically designed for end-to-end testing. It has capabilities like browser-specific tests, parallel test execution, rich browser context options, snapshot testing, automatic retries and many more.

There are two ways of handling new page opened in child window (using link with target="_blank"):

const [newPage] = await Promise.all(

[ context.waitForEvent('page'),//page is Event name

docLink.click(), ] )

const pagePromise = context.waitForEvent('page'); //no await!

await docLink.click();

const newPage = await pagePromise;

In order to create a button in HTML and provide interaction using JavaScript there are following steps to be implemented:

<head>

<script type="text/javascript" src="path/scp.js"></script>

</head>

<body>

<button id="btnID">Click me...</button>

</body>function clickRun() {

console.log("Thank you for your click!");

}

// Attach event listeners

document.addEventListener("DOMContentLoaded", function () {

const elementBtn = document.getElementById("btnID");

elementBtn.addEventListener("click", clickRun);

});

The first parameter of addEventListener is key of DocumentEventMap interface

The following code performs file upload using File open Dialog:

function loadFile() {

// Create an invisible file input element

const fileInput = document.createElement("input");

fileInput.type = "file";

fileInput.click(); //(1) Opens the file picker dialog

// (2) Fired change event ...

fileInput.addEventListener("change", function () { //(3)

if (fileInput.files.length > 0) {

const file =fileInput.files[0];

console.log("Selected file:", file.name);

// Read file content

const reader = new FileReader();

reader.onload = function (event) { //(6)

let fileContent = event.target.result;

console.log("File Content:\n", fileContent);

};

reader.readAsText(file); // (4)...(5) Fired onload

}

});

}There is workflow explained:

It is common and safe practice in JavaScript to define event handlers before triggering events. The consistent event-driven pattern -to define what happens before starting the action- ensures that when the file reading is finished, the handler is attached. If event handler was defined after file reading action, there is a tiny posibility for event to be lost. Is the event-driven pattern consistency ruined by calling fileInput.click() before event listener for change event? The pattern is still intact because the "change" event listener is set up before the event can possibly be fired. There is no race condition since user action is required.

Accumulator pattern

When appending HTML content using innerHTML it has to be used accumulator pattern. Every time innerHTML is set, the HTML has to be parsed, a DOM constructed, and inserted into the document. When writing to the DOM it can be created whole elements only, and they are placed to the DOM at once.

let htmlContent = "openTag";

htmlContent += "elementContent";

htmlContent += "closeTag";

element.innerHTML += htmlContent;Function with variable number of parameters

The ...rest syntax collects all remaining arguments into an array

function f(param1, ...rest){

console.log("rest:", rest);

}

f(1,2,3,4); //Output: rest: [2,3,4]

JavaScript Intermediate (2)

Sunday, 26.01.2025

There are 3 layers of standard web technologies: HTML, CSS and JavaScript. HTML is the markup language that we use to structure to the web content. CSS is a language of style rules that we use to apply styling to the HTML content, to look more beautiful. JavaScript is a scripting language that enables the web page to be interactive.

Use for...in loop to iterate throug JSON object. When applied to array, it will get array index for each element. Using for...of loop wil iterate values in array.

const jsonOb = {"key1" : 111, "key2": 222};

const arrX = ["first", "sec", "third"];

for( let key in jsonOb )

console.log(key, jsonOb[key]); //key1 111 key2 222

for( let key in arrX )

console.log(key); // 0,1,2

for( let value of arrX )

console.log(value); //first sec third

To connect to MS SQL Server database from Node.js, perform query and run SQL script, the first step is to install mssql. After that import the needed objects setup configuration of database connection:

const sql = require('mssql');

const fs = require('fs');

const path = require('path');

const config = { // Configuration for DB connection

user: 'sa',

password: 'myPwd',

server: 'remote-server', // You can use 'localhost\\instance' to connect to named instance

database: 'MY_DB',

options: { encrypt: false, // Use true if your server supports encryption

trustServerCertificate: true } };// Change to true for local dev / self-signed certs

Connection is established using sql.connect() method, that returns sql.ConnectionPool object. Running query or SQL script is done using pool.request().query():

async function getData() {

try {

let pool = await sql.connect(config);

let res = await pool.request().query(`SELECT * FROM MY_T`);

const scriptPath = path.join(__dirname, 'script.sql');

const script = fs.readFileSync(scriptPath, 'utf8');

res = await pool.request().query(script);

console.log('Script executed successfully:', res);

return res.recordset; }

catch (err) { console.error('SQL error', err); }

finally { sql.close();} }

await getData().then(data => {

console.log('Data retrieved:', data);

}).catch(err => { console.error('Data Error:', err); });

For loading data from CSV file and parameterizing query to get file path it is used dynamic SQL. When DB table needs to be either updated or new record inserted, it is used MERGE statement to ensure the right action:

For loading data from CSV file and parameterizing query to get file path it is used dynamic SQL. When DB table needs to be either updated or new record inserted, it is used MERGE statement to ensure the right action:

DECLARE @FilePath VARCHAR(255); SET @FilePath = 'C:\Path\To\File\DataSrc.csv'; DROP TABLE IF EXISTS #tmpTable; CREATE TABLE #tmpTable(a nvarchar(5) not null, b nvarchar(6) not null); DECLARE @BulkInsertSQL NVARCHAR(500); SET @BulkInsertSQL = N' BULK INSERT #tmpTable FROM ''' + @FilePath + N''' WITH ( FIRSTROW = 2, FIELDTERMINATOR = '';'', ROWTERMINATOR = ''\n'', CODEPAGE = ''65001'', -- Specifies UTF-8 encoding DATAFILETYPE = ''widechar'' );'; -- Unicode (NVARCHAR) EXEC sp_executesql @BulkInsertSQL;

MERGE INTO TABLE_DEST AS Dest USING #tmpTable AS Source ON Dest.Field1 = Source.Field1s WHEN MATCHED THEN UPDATE SET Dest.Field2=Source.Field2s, Dest.Field3=Source.Field3s WHEN NOT MATCHED BY TARGET THEN INSERT(ID, Field2, Field3) VALUES(LOWER(NEWID()), Source.Field2s, Source.Field3s);

To generate unique string based on current datetime with granulation of 1 minute and length up to 4 characters for next 14 years and up to 5 characters for next more than 200 years, it is cool to use Base 62:

const BASE62_CHARS = '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz';

function toBase62(num) {

if (num === 0) return '0';

let str = '';

while (num > 0) {

const remainder = num % 62;

str = BASE62_CHARS[remainder] + str;

num = Math.floor(num / 62); }

return str; }

export function generateStrUnique(){

const datetimenow = new Date();

const year = datetimenow.getFullYear()-2025;

const month = datetimenow.getMonth();

const day = datetimenow.getDate();

const hour = datetimenow.getHours();

const minute = datetimenow.getMinutes();

let valueNum = (year << 20) | (month << 16) | (day << 11) | (hour << 6) | minute;

return String(toBase62(valueNum)); }

File system I/O operations: use path.dirname to extract directory path from the file path. To ensure that directory exists and to create new one if it doesn't use fs.mkdirSync. Use fs.existsSync to check if file exists, and fs.unlinkSync to delete it if it does. To append line into the file use fs.appendFileSync and to read file content use fs.readFileSync. In order to completely rewrite the file content, use fs.writeFileSync.

const fs = require('fs');

const path = require('path');

const dirPath = path.dirname(strFilePath);

fs.mkdirSync(dirPath, { recursive: true });

if (fs.existsSync(strFilePath))

fs.unlinkSync(strFilePath);

fs.appendFileSync(strFilePath, htmlContent, 'utf8');

const htmlFile = fs.readFileSync(strFilePath, 'utf-8');

fs.writeFileSync(strFilePath, htmlContent, 'utf-8');

DOM manipulation methods are inherently browser-based and aren't directly available when working with Node.js on the server side. When using fs.readFileSync to read an HTML file into a string variable in Node.js, it's dealing with plain text without a DOM to interact with. To achieve DOM-like manipulation on the server side, there is JSDOM - a popular library that simulates a subset of browser APIs, including the DOM, within Node.js. This allows you to manipulate HTML documents as if you were in a browser environment. In order to update particular HTML section defined by ID the code follows:

const htmlContent = fs.readFileSync(filePath, 'utf-8');

// Initialize JSDOM with the HTML content

const dom = new JSDOM(htmlContent);

// Access the document

const document = dom.window.document;

// Use getElementById to find the target div

const myDiv = document.getElementById(idSection);

myDiv.innerHTML += "New line to add";

// Serialize the updated HTML back to a string

const updatedHTML = dom.serialize();

// Write the updated HTML back to the file

fs.writeFileSync(filePath, updatedHTML, 'utf-8');

Automated Test Containerization

Sunday, 01.12.2024

Docker is an open platform for developing, shipping, and running applications. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker's methodologies for shipping, testing, and deploying code, you can significantly reduce the delay between writing code and running it in production.

Docker provides the ability to package and run an application in a loosely isolated environment called a container.

The isolation and security lets you run many containers simultaneously on a given host. Containers are lightweight and contain everything needed to run the application, so you don't need to rely on what's installed on the host. You can share containers while you work, and be sure that everyone you share with gets the same container that works in the same way.

The isolation and security lets you run many containers simultaneously on a given host. Containers are lightweight and contain everything needed to run the application, so you don't need to rely on what's installed on the host. You can share containers while you work, and be sure that everyone you share with gets the same container that works in the same way.

To setup automation test development environment:

------------------------------------------

1. Install VSCode and git

2. Install Node.js: from https://nodejs.org/en. This is essential as Playwright is a Node.js library.

-Add to Path NODE_HOME="C:\Program Files\nodejs"

-To check if it is installed run: npm --version

3. Playwright Framework: Install Playwright using npm

-To check if it is installed run: npx playwright --version

-To check if installed globally run: npm config get prefix and check for Playwright files and/or folders in node_modules

-To clear cache if previous playwright remained: npx clear-npx-cache

-To clear cache from npm: npm cache clean --force

Run: npm init playwright if starting new Playwright project

-Select JavaScript, folder to put end-to-end test

-Select to Install Playwright browsers or manually via "npx playwright install"

-This command initializes a new Playwright testing project in the current directory

-It creates all the necessary configuration files and sets up the folder structure for Playwright tests

-It installs @playwright/test and other necessary dependencies

-It creates playwright.config.js (configuration for the Playwright project) and example tests in the tests folder

-It keeps environment clean - all dependencies are installed locally (not globally) keeping the project isolated

3-A If you already have a Node.js project and just want to add Playwright for testing, use npm install @playwright/test

4. Create the first test

-In PowerShell create new spec.js file:

New-Item -Path ./tests -Name "first-test.spec.js" -ItemType "File"

-Skeleton of the first-test.spec.js file is as follows:

const { test, expect } = require('@playwright/test');

test('Navigate to a webpage', async ({ browser }) => {

// Create a new browser context and page

const context = await browser.newContext();

const page = await context.newPage();

// Navigate to your desired URL

await page.goto('https://www.google.com');

// Keep the browser open for 3 seconds (optional)

await page.waitForTimeout(3000);

// Close the context

await context.close();

});

5. Select browser to use, for Edge ensure there is only the following item in the projects array within playwright.config.js:

/* Configure projects for major browsers */

projects: [ { name: 'Microsoft Edge',

use: { ...devices['Desktop Edge'], channel: 'msedge'}, }, ],

6. Create folder for JS files, create first module and Hello World function. In order to call this function from spec file, it has to be exported from JS file and imported in spec file

// module01.js

function helloWorld() {

console.log("Hello world!"); }

module.exports = { helloWorld };

// first-test.spec.js

const { helloWorld } = require('../utils/module01');

7. Run the test using command

npx playwright test first-test.spec.js --headed

-In PowerShell if it doesn't execute, run the following

Set-ExecutionPolicy RemoteSigned -Scope Process

To containerize project using Docker:

------------------------------------------

1. Install Docker Desktop

-Ensure Docker is installed: docker --version

2. Add Dockerfile to project root and test locally:

-Create a Docker image by running: docker build -t my-app-name .

-Run Docker image by command: docker run --rm my-app-name

-Run interactively by: docker run -it my-app-name /bin/bash

3. Add Dockerfile to root project folder

-This file describes how to package your app into a container. This setup ensures your app runs entirely within the container, independent of the host machine.

FROM command:

-When using the Playwright Docker image (mcr.microsoft.com/playwright), the necessary browsers are preinstalled in the container and there is no need to install them manually

RUN command:

-There is no need to add a separate installation step for Playwright in the Dockerfile, if the devDependencies in the package.json include @playwright/test, which is Playwright's official testing library. As long as @playwright/test is listed in package.json, npm install ensures Playwright is installed in the container

-When RUN npm install executes, it will install all the dependencies listed in the devDependencies section of the package.json, including Playwright.

# Use Playwright's official Docker image with browsers FROM mcr.microsoft.com/playwright:v1.36.0-focal # Set working directory in the container WORKDIR /app # Copy project files into the container COPY . . # Install project dependencies RUN npm install # Expose a port if needed (optional, for web-based apps) # EXPOSE 3000 # Default command to run all tests (or runtime user-defined) CMD ["npx", "playwright", "test"] # Default command to run specific test script #CMD ["npm", "run", "create-item"]

4. Add a docker-compose.yml in the root of your project, to simplify running the container.

version: '3.8' services: playwright-app: build: context: . image: playwright-app container_name: my-playwright-container volumes: - .:/app stdin_open: true tty: true

5. Test locally: docker compose up --build

To setup automation test PRODUCTION environment:

------------------------------------------

1. Node.js: This is essential as Playwright is a Node.js library.

To check if it is installed run: npm --version

2. Playwright Framework: They need to install Playwright using npm.

To check if it is installed run: npx playwright --version

3. Your Code: Provide all the necessary scripts and files.

4. VSCode (Optional): If they need to edit or debug the code.

5. Run: npm init playwright

6. Run: npm install playwright

7. Run: npm install @playwright/test

8. Configure Playwright: Set up the Playwright configuration file.

9. Run Tests: Use the appropriate npm scripts to execute the tests.

Besides a.m. core dependencies, if your project uses additional libraries or tools, make sure to include them in your package.json file.

6. Client step 1 Install Docker Desktop on their Windows machine

7. Client step 2 Obtain your Docker image

You have two options:

7.1. Share the Docker image:

---Push it to Docker Hub or another registry:

docker tag playwright-app your-dockerhub-username/playwright-app

docker push your-dockerhub-username/playwright-app

---Client can pull it:

docker pull your-dockerhub-username/playwright-app

docker run --rm your-dockerhub-username/playwright-app

7.2. Share your project folder:

---Your client can clone/download it, then run:

docker compose up --build

8. Client step 3 Simplify running the app:

---Create a Shortcut: Use a .bat file for Windows to run the container with a click:

@echo off

docker compose up --build

pause

Save this file as run-tests.bat in the project folder

#run ALL tests: docker run my-playwright-container #run SPECIFIC test: docker run my-playwright-container npx playwright test CreateItem.spec docker run my-playwright-container npm run switch-item

JavaScript Intermediate

Sunday, 04.11.2024

There are 3 layers of standard web technologies: HTML, CSS and JavaScript. HTML is the markup language that we use to structure to the web content. CSS is a language of style rules that we use to apply styling to the HTML content, to look more beautiful. JavaScript is a scripting language that enables the web page to be interactive.

Iterating through array using forEach. If need to use break, it's better to use regular for loop.

let arrTest = [1,2,4];

arrTest.forEach(function(element,index){ //can take 2 params

console.log(element, index) });

arrTest.forEach((el,index) => { console.log(el, index) });

A shorter way to write function is Arrow function. It is easier to read, but does not enable hoisting as regular functions do, which stands for possibilty to call a function before it's definition.

const regularFunction = function () { console.log('Rf'); }

const arrowFunction = () => { console.log('Af'); }

If Arrow function has only 1 parameter, parentheses are not needed

const oneParam = (param) => { console.log(param+1); };

const oneParam = param => { console.log(param+1); };

oneParam(2); // output writes 3

If Arrow function has only 1 line, return and {} are not needed

const oneLine = () => { return 2 + 3; };

const oneLine = () => 2 + 3;

console.log(oneLine()); // output writes 5

Built-in function setTimeout is a practical example of callback function which means passing a function to another function as parameter.

setTimeout(function() {

console.log("function called in the future") }, 3000);

console.log("asyncronously line after but called first");

// Implementing delay

await new Promise((resolve) => setTimeout(resolve, 5000));

Built-in function setInterval runs periodically. There is example with running it each 1s within interval of 5s

let isRunning = false;

let hasToRun = false; // initially on page load

let intervalId;

function resetInterval() {

hasToRun = !hasToRun;

if (hasToRun && !isRunning) {

intervalId = setInterval(function () { // Start

console.log("Running interval ...");

}, 1000);

isRunning = true;

} else if (!hasToRun && isRunning) { // Stop the interval

clearInterval(intervalId);

isRunning = false; }

}

resetInterval(); // Toggle on

await new Promise(resolve => setTimeout(resolve, 5000));

resetInterval(); // Toggle off

To get time interval between 2 Date objects in proper format:

function getDuration( dtStart, dtEnd ) {

const ms = dtEnd - dtStart;

const seconds = Math.floor(ms / 1000);

const minutes = Math.floor(ms / (1000 * 60) );

const hours = Math.floor(ms / (1000 * 60 * 60));

let duration = String(ms % 1000) + 'ms';

if( seconds > 0 )

duration = (String(seconds % 60)) + 's ' + duration;

if( minutes > 0 )

duration = (String(minutes % 60)) + 'min ' + duration;

if( hours > 0 )

duration = (String(hours)) + 'h' + duration;

return duration; } // 1h 23min 33s 129ms

To update value for particular key in JSON file:

updateJSONfile(key, value)

{

const fs = require('fs');

const filePath = './path/file.json';

try {

// Read the existing file

const fileContent = fs.readFileSync(filePath, 'utf-8');

const data = JSON.parse(fileContent);

data[key] = value; // Modify the data

fs.writeFileSync(filePath, // Write updated data back

JSON.stringify(data, null, 2), 'utf-8');

} catch (err) {

console.error('[***Error handling JSON file]', err);

}

}

JavaScript Basics (2)

Sunday, 06.10.2024

There are 3 layers of standard web technologies: HTML, CSS and JavaScript. HTML is the markup language that we use to structure to the web content. CSS is a language of style rules that we use to apply styling to the HTML content, to look more beautiful. JavaScript is a scripting language that enables the web page to be interactive.

Truthy and falsy values are supersets of true and false. String can be defined with '-' or "-" or backticks `-` (template strings) which is the most flexible option, that allows to pass variable and to create multiline string as well. There are 3 shortcuts for if statement:

if( !value ) //check for false, 0, '', NaN, undefined, null

let a = 5

const str = `The Number that is defined in the variable

is five=${a}`; // Inserts 5, multiline string

const res = (a === 6 ? truthy : falsy) //Ternary operator

const b = (a != falsy) && a * 2 //Guard operator

const c = (a / 0) || 'Cannot resolve' //Default operator

If accessed the object's property that doesn't exist it will return undefined value. To delete propery use function delete obj.property.

Object property can be function as well, that can be defined by using shorthand method as well.

const product = {

calc: function calcPrice() { return 100; } };

const product = { calc() { return 100; } }; //Shorthand method

const price = product.calc();

Using destructuring as a shortcut to assign object's property to the variable of the same name. If property and variable have the same name, by creating an object can be used shorthand property shortcut

const message = objectPostman.message;

const { message } = objectPostman; // Destructuring

const { message, post } = objectPostman; // More as well

const objectPost2 = { message }; // Shorthand property

TheJSON built-in object can convert JS object to string and vice versa.

const strJSON = JSON.stringify(product);

product = JSON.parse(strJSON);

Using localStorage built-in object to save and load state between browser refresh. It will survive Ctrl+F5, but after clearing cookies from browser setting it will reset value

let visits = localStorage.getItem('visits') || '0'

visits = Number(visits); //Convert to number

visits++;

localStorage.setItem('visits', visits);

alert(visits);

localStorage.removeItem('visits'); //removing item

The DOM built-in object is the objects that models (represents) the web page. It combines JS and HTML together and provides with full control of the web within JavaScript. The method querySelector() lets us get any element of the page and put it inside JS, returning the first one if there are more. If element is found, it return JS object (accessing value returns string), otherwise it returns null. Unlike innerHTML, innerText will trim inner text. For input element it is used value property.

document.body.innerHTML = 'New content';

document.title = 'New title';

document.querySelector('button') //<button>Caption</button>

document.querySelector('button').innerHTML //Caption

document.querySelector('button').innerHTML='New'//New

document.querySelector('button').innerText // Trim

str = document.querySelector('input').value // <input>

num = Number(str) //convert to do math operations

str = String(num) //converts number to string

To dynamically set image, passed as variable imageName:

const element = document.querySelector('img');

element.innerHTML = `<img src="images/${imageName}.png>`

To access class list and modify class content in JS:

const btn = document.querySelector('button');

btn.classList.add('new-class');

btn.classList.remove('not-needed-class');

The window built-in object represents the browser.

window.document // DOM root object

window.console // built-in console objects

window.alert // popup

Object event and event listeners are used for event handling

Using property key of the object event can be ckech if particular key pressed

<input onkeydown="if(event.key === 'Enter') handleEnter();

else handleOtherEvents(event);"

Playwright Test Automation with JavaScript (3)

Sunday, 01.09.2024

A test runner with Jest-like assertions @playwright/test is developed and maintained by the Playwright team that is built on top of the Playwright API. This test runner is tightly integrated with Playwright and is specifically designed for end-to-end testing. It has capabilities like browser-specific tests, parallel test execution, rich browser context options, snapshot testing, automatic retries and many more.

To setup automation test environment:

------------------------------------------

1. Node.js: This is essential as Playwright is a Node.js library.

2. Playwright Framework: They need to install Playwright using npm.

3. Your Code: Provide all the necessary scripts and files.

4. VSCode (Optional): If they need to edit or debug the code.

5. Run: npm init playwright

6. Run: npm install playwright

7. Run: npm install @playwright/test

8. Configure Playwright: Set up the Playwright configuration file.

9. Run Tests: Use the appropriate npm scripts to execute the tests.

Besides a.m. core dependencies, if your project uses additional libraries or tools, make sure to include them in your package.json file.

To compare locators, their handles have to be evaluated and compared:

const handle1 = await loc.elementHandle();

const allHandles = await collLocs.elementHandles();

for (let i = 0; i < allHandles.length; i++) {

const handle = allHandles[i];

// Pass both handles wrapped in an object to eval

const isSame = await loc.page().evaluate(

({ el1, el2 }) => el1 === el2,

{ el1: handle1, el2: handle } ); }

Split string by defined delimiter returns string array

let arrURL = server.split('//'); // https://site.com

let strProtocol = arrURL[0] // gets "https:"

Waiting for locator to be visible using state (timeout is optional) or assertion. In particular, assertion to be 1 locator exactly

loc.waitFor({ state: 'visible', timeout: 5000 });

await expect(loc).toBeVisible();

await expect(loc).toHaveCount(1);

Locator returned from locator() method on page or other locator (DOM subtree search) will be valid object, if not found it will be locator that points to nothing with count() == 0, and locator.nth(n) will not raise an error if n is out of range

To wait for an element to be updated on the page with new value:

const selT = 'p.class1';

// Poll every 500ms to log the text content

for (let i = 0; i < 20; i++) { // timeout = 10 s

const currentDisplay = await this.page.textContent(selT);

if( currentDisplay == "Expected" ) //text updated

break;

await this.page.waitForTimeout(500); } // Wait

To wait for a page with specific URL and check it

page.waitForFunction(() => window.location.href.includes('S'));

page.url().includes('PartOfURL')

Traversing the DOM tree

Accessing parent element is possible for one or more levels

locParent = locChild.locator("..")

locGranpa = locChild.locator("../..")

Get the next sibling and list of all following siblings

locNextSib = locChild.locator('xpath=following-sibling::*[1]')

locAllFlwSibs = locChild.locator('xpath=following-sibling::*')

Checking if root node is reached while searching level-up

const tg = await loc.evaluate(el => el.tagName.toLowerCase());

if (tg === 'html')

Playwright Reporting

To implement custom report using CustomReporter class:

// custom-reporter.js

class CustomReporter {

async renameReportFile(oldPath, newPath) {

const fs = require('fs').promises;

try {

await fs.rename(oldPath, newPath);

console.log(`Renamed from ${oldPath} to ${newPath}`);

} catch (error) {

console.error(`Error file: ${error.message}`); }

}

async onEnd(result) {

console.log('Updating Report file name...');

await this.renameReportFile("old/index.html", newPath());

console.log(`Status: ${result.status}`);

console.log(`Started at: ${result.startTime}`);

console.log(`Ended at: ${result.endTime}`);

console.log(`Total duration: ${result.duration}ms`);

}

}

module.exports = CustomReporter;

// playwright.config.js

module.exports = defineConfig({ ... reporter: [

['html', { outputFolder: 'test-results', open: 'never' }],

['./tests/custom-reporter.js'] ],

Playwright Test Automation with JavaScript (2)

Sunday, 25.08.2024

Playwright provides a set of APIs that allow developers and testers to write test scripts to automate web applications across different browsers, such as Chromium, Firefox, and WebKit. It supports multiple programming languages like Java, TypeScript, Python, C# and of course - JavaScript. To setup environment, the first step is to install Node.js - an open-source, cross-platform, back-end JavaScript runtime environment that runs on the V8 engine and executes JavaScript code outside a web browser.

The command npm init playwright installs Playwright locally. It installs @playwright/test package (the Playwright test library) into your project's node_modules directory as a local dependency. The Playwright CLI is available within the project scope but not system-wide. To check Playwright version it shoud be run npx playwright --version

To wait until particular element is visible, or if it is attached to the DOM:

await locator.waitFor({ state: 'visible'})

await locator.waitFor({ state: 'attached'})

After option in combo box is selected, or datetime from Datetimepicker connected to input element is chosen, current content (selected option or datetime) is extracted using inputValue method.

locInput = await page.locator('input#ID')

extractedValue = locInput.inputValue();

await expect(extractedValue).toContain('textToContain')

await expect(locInput).toHaveValue('textToContain')

locLabel = await page.locator('label#ID2')

await expect(locLabel).toContainText('textToContain')

The methods page.locator can return one, many or none locators. To ensure it found element tried to locate it can be used count() method. If it returns many locators, it cannot be iterated through as an array, but using particular locator methods:

locElements = await page.locator('#ID')

locElements.count() !=0 //ensure returned at least 1 locator

//if returned many, loop through array of locators this way:

const n = await locElements.count();

for(let i=0; i<n; i++)

await itemText = locElements.nth(i).textContent();

To locate an element by its text content using exact or partial match:

page.locator('tag.class:text("Partial match")')

page.locator('tag.class:text-is("Exact match")')

To check if an element contains particular class

//Extracting the value of the 'class' attribute

classAttValue = locElement.getAttribute('class')

classAttValue.includes('required-class')

To locate element by its class and text content using XPATH

sel = 'xpath=//span[contains(@class,"c1") and text()="Abc"]'

locElement = page.locator(sel);

Searching for particular text on the page using backticks, scroll page up and down using keyboard, log array as table using console.table and highlighting particular element

page.locator(`text="${strTextToFind}"`)

await page.keyboard.press("PageDown")

console.table(arrToShow)

locator.highlight()

Global login - Re-use state & Re-use Authentication

There are 3 main actions that have to be performed on browser, context and page objects.

await context.storageState({path: 'LoginAuth.json'})

c2 =await browser.newContext({storageState: 'LoginAuth.json'})

page = await c2.newPage();

await page.goto("https://site.domain.com/LoginStartPage");

STEP 1 - the first step is to create Global setup file, containing globalSetup() function which will run once before all tests. There will be authentication code in that file and using Playwright storage state capability the state of application after logging in will be stored. The function has to be exported to be used from test function.

//global-setup.js

async function globalSetup(){

const browser = await chromium.launch({headless: false,

executablePath: 'C:\\Program Files (x86)\\Microsoft\\Edge\\Application\\msedge.exe'

});

const context = await browser.newContext();

const page = await context.newPage();

await doLogin(); //login code

//Save the state of the web page - we are logged in

await context.storageState({path: "./LoginAuth.json"});

await browser.close(); //cleanup

}

export default globalSetup;

STEP 2 - update playwright.config.js to use global-setup file, this line is to be added to the level of timeout parameter

globalSetup: "./global-setup",

STEP 3 - in order to use stored state that we have logged into, it needs to be added line in use section of playwright.config.js.

storageState: "./LoginAuth.json",

STEP 4 - in order to re-use stored state from JSON file in client code remove the line from config file and load stored settings from JSON file in the client code

//storageState: "./LoginAuth.json",

c2 =await browser.newContext({storageState: 'LoginAuth.json'})

page = await c2.newPage();

await page.goto("https://site.domain.com/LoginStartPage");

STEP 5 - some particular test can be configured either to use specified JSON file other that defined in config (before function implementation), or to clear cookies and perform login action (inside function body)

test.use({ storageState: "./ParticularAuth.json"}); //Option 1

test("Pw test", async ({ page, context })){

await context.clearCookies(); //Option 2 - clearing cookies

JavaScript Basics (1)

Sunday, 28.07.2024

There are 3 layers of standard web technologies: HTML, CSS and JavaScript. HTML is the markup language that we use to structure and give meaning to our web content, for example defining paragraphs, headings, and data tables, or embedding images and videos in the page. CSS is a language of style rules that we use to apply styling to our HTML content, for example setting background colors and fonts, and laying out our content in multiple columns. JavaScript is a scripting language that enables you to create dynamically updating content, control multimedia, animate images, and many other things.

Declaring variable or constant and get its type:

var a = 5

let b = 6

const c = 7

console.log(typeof(a))

Declaring array, access its elements:

var marks = Array(4) //4 elements, empty

var marks = new Array(6,7,8,9) //one way

var marks = [6,7,8,9] //another way

console.log(marks[0]) //access 1st element - Returns 6

Iterating array elements:

let i = 0;

for(let element of marks)

console.log("Element Nr. ", i++, "=", element)

Append, delete, insert, get index of particular element, check presence of particular element:

marks.push(10) //Added to end 10 [6,7,8,9,10]

marks.pop() //Deleted last [6,7,8,9]

marks.unshift(5) //Insert in the beginning [5,6,7,8,9]

marks.indexOf(7) //Returns 2 - index of 7

marks.includes(99) //Returns false - 99 not present in Array

Slice includes first index, excludes last index. Length,

subArr = marks.slice(2,4) //Returns [7,8]

marks.length //Returns 5 = array length

Reduce, filter and map.

//Anonymous function, 0 is initial value, marks[5,6,7,8,9]

let total=marks.reduce((sum,mark)=>sum+mark,0) //Returns 35

let evens=marks.filter(element=>element%2==0) //Returns [6,8]

let dbl=marks.map(element=>element*2) //Rets. [10,12,14,16,18]

Sorting an array

arr.sort() //for string array ASC

arr.reverse() //Reversing an array

arr.sort((a,b)=>a-b) //number array ASC

arr.sort((a,b)=>b-a) //number array DESC

Class declaration, exporting and importing

class MyClass

{

constructor(property_value)

{

this.my_propery = property_value;

}

}

module.exports = {MyClass}

const {MyClass} = require('./path/to/class')

Global variable declaration, exporting and importing

export let x1 = dataSet.sys;

import { x1 } from '../path/declared.js';

Creating and calling asynchronous test function

test('First Playwright test', async({ browser }) => {

const context = await browser.newContext();

const page = await context.newPage();

await page.goto("www.google.com");

});

Iterating through JavaScript object (key-value pair collection)

Use for...of for arrays when you want the values

const keysOfObject = Object.keys(object1)

for(let key of keysOfObject)

const itemValue = object1[key]

Use for...in for objects when you need the property names (keys)

for (let key in object1) {

const itemValue = object1[key] }

Working with Date object

const date1 = new Date() //current datetime

let date2 = date1; //by ref =>pointer

let date3 = new Date(date1) //by value =>copy

let date4 = new Date(2024,11,5,10,25); //11=Dec, 0-based month

date1.toISOString(); //shorter string

The Math.floor() static method always rounds down and returns the largest integer less than or equal to a given number. Math.round() rounds number, and Math.random() generates random number between 0 and 1;

const a = 4.5

let b = Math.floor(a) //b=4

let c = Math.round(a) //c=5

let r = Math.random() //between 0 and 1

Playwright Test Automation with JavaScript (1)

Sunday, 23.06.2024

Playwright provides a set of APIs that allow developers and testers to write test scripts to automate web applications across different browsers, such as Chromium, Firefox, and WebKit. It supports multiple programming languages like Java, TypeScript, Python, C# and of course - JavaScript. To setup environment, first is to be installed node.js and after that to perform the following steps:

Selecting page object using CSS selector by ID, class, attribute and text:

Locator = page.locator('#ID')

Locator = page.locator('tag#ID')

Locator = page.locator('.classname')

Locator = page.locator('tag.classname')

Locator = page.locator("[attrib='value']")

Locator = page.locator("[attrib*='value']") //using RegEx

Locator = page.locator(':text("Partial")')

Locator = page.locator(':text-is("Full text")')

This will return element, on which will be perfomed actions - fill(), click() etc.

Extracting text present in the element (grab text). How long will Playwright wait for element to come to DOM is defined in config file, { timeout = 30 * 1000 }

const txtExtracted = Locator.textContent()

The following is defined in the config file:

const config = {

testDir: './tests', //run all tests in this dir

timeout: 30 * 1000, //timeout for each test

expect: {

timeout: 5000 //timeout for each assertion

},

Assertion - check if defined text is present in element (->Doc: Assertions). How long - defined in config file { expect: { timeout: 5000 } ...}

expect(Locator.toContainText('Part of text'))

Traverse from parent to child element - Parent element has class parentclass, and child element has tag b

<div abc class="parentclass" ...

<b xyz ...

page.locator('.parentclass b')

When returned list of elements (->Doc: Auto-waiting) - Playwright will wait until locator is attached to the DOM, few ways to access particular list element.

It can also be extracted text present in all returned locators and assigned to array, but Playwright won't wait until locators are attached to the DOM:

LocatorsList = page.locator('.class1') //more elements

LocatorsList.nth(0)

LocatorsList.first()

LocatorsList.last()

const arrReturnedElementsText = LocatorsList.allTextContents()

//returns [el1, el2, el3]

To wait until locators are attached to DOM (->Doc: waitForLoadState) it is required to add an additional synchronization step

//Option 1 - (discouraged)

await page.waitForLoadState('networkidle')

//Option 2

LocatorsList.first().waitFor()

Handling static DDL, Radio button, Check box

const ddl = page.locator("select.classname") //Locate DDL

await ddl.selectOption("OptionValuePartOfText") //select opt.

const rb1 = page.locator(".rbc").first() //1st RB option

await rb1.click()

await expect(rb1.toBeChecked()) //assertion

await rb1.isChecked() //returns bool value

await cb1.click()

await expect(cb1.toBeChecked())

await cb1.uncheck()

expect( await cb1.isChecked() ).toBeFalsy() //action inside

Opening Playwright Inspector to see UI actions

await page.pause()

Check if there is particular attribute and value

await expect(Locator).toHaveAttribute("att","val1")

Child windows handling with Promise. There are 3 stages of Promise: pending, rejected, fulfilled.

const newPage = await Promise.all([

context.waitForEvent('page'),

docLink.click(),

])

Pytest & Playwright Web Automation Testing

Sunday, 19.05.2024

Pytest is a popular Python testing framework that offers a simple and flexible way to write and run tests. Playwright is a modern, fast and reliable browser automation tool from Microsoft that enables testing and automation across all modern browsers including Chromium, Firefox and Webkit. In this article series, there will be shown how to blend Playwright capabilities into the Pytest framework with the use of pytest-playwright plugin.

Python features covered in this article

---------------------------------------------

-BrowserType.launch(headless, slowmo)

-Browser.new_page()

-Page.goto()

-Page.get_by_role('link', name)

-Locator.click()

-Page.url

-Browser.close()

-Page.get_by_label()

-Page.get_by_placeholder()

-Page.get_by_text()

-Page.get_by_alt_text()

-Page.get_by_title()

-Page.locator - CSS selectors, Xpath locators

-Locator.filter(has_text)

-Locator.filter(has)

Getting started - Environment setup

Checking Python version, creating and activating virtual environment, installing Playwright, updating pip, checking playwright version:

PS C:\Users\User\PyTestPW> python --version

Python 3.11.2

PS C:\Users\User\PyTestPW> python -m venv venv

PS C:\Users\User\PyTestPW> .\venv\Scripts\activate

(venv) PS C:\Users\User\PyTestPW>

(venv) PS C:\Users\User\PyTestPW> pip install playwright

Successfully installed greenlet-3.0.3 playwright-1.44.0 pyee-11.1.0 typing-extensions-4.11.0

[notice] A new release of pip available: 22.3.1 -> 24.0

(venv) PS C:\Users\User\PyTestPW> python.exe -m pip install --upgrade pip

Successfully installed pip-24.0

(venv) PS C:\Users\User\PyTestPW> playwright --version

Version 1.44.0

(venv) PS C:\Users\User\PyTestPW> playwright install

Downloading Chromium 125.0.6422.26 ... 100%

Downloading Firefox 125.0.1 ... 100%

Downloading Webkit 17.4 ... 100%

Creating simple test script

The first script consists of the following steps:

The following code is implemented in new file app.py :

# FILE: app.py

from playwright.sync_api import sync_playwright

with sync_playwright() as playwright:

# Select Chromiun as browser type on Playwright object

bws_type=playwright.chromium

# Launch a browser using BrowserType object

browser = bws_type.launch()

#Create a new page using Browser object

page = browser.new_page()

#Visit the Playwright website using Page object

page.goto("https://playwright.dev/python/")

#Locate a link element with "Docs" text

docs_btn=page.get_by_role('link', name="Docs")

#Clink on link using Locator object

docs_btn.click()

#Get the URL from Page object

print("Docs: ", page.url)

#Closing method on Browser object

browser.close()By default headless argument in the launch method is True (no UI shown), and by setting it to False the browser UI will be shown. To see the script execution in slow motion it can be used slowmo argument (slowmo=500 means 500 times slower execution) in the same launch method of the BrowserType object

To locate link to be clicked, in inspection mode when positioning mouse on the link there will be shown HTML section of the particular element:

Link is located using get_by_role() method of page object, and clicked using click() method of the returned object.

Running our script from Terminal:

(venv) PS C:\Users\User\PyTestPW> python app.py

Docs: https://playwright.dev/python/docs/introSelecting Web elements in Playwright

In the Method get_by_role() the first argument is object type - link, button, heading, radio, checkbox..., and the 2nd argument is the object name. Method is called on Page object and returned value is Locator object

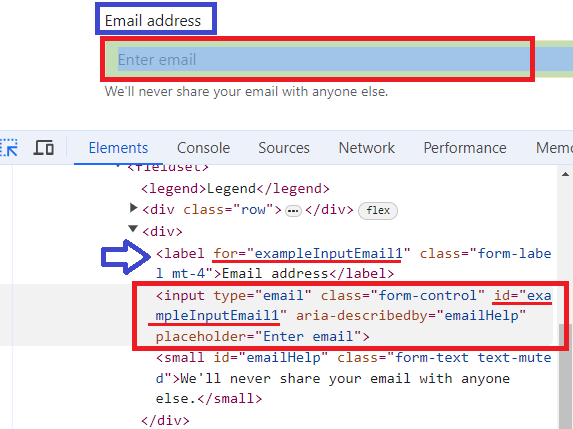

Method get_by_label() can be used for input field that has a label element associated, as in example below

If input field has no label associated, it can be located using get_by_placeholder() method. Locating element using inner text locator (caption of the button, paragraph containing searched text, etc...) is done using method get_by_text(). Locating image by alt text is done using get_by_alt_text() method. For elements that have title attribute specified and unique, it can be used get_by_title() method for locating the element.

All of the a.m. methods return Locator object.

CSS selectors

Locating a web element using CSS selector is done using Page.locator method (passing "css=" is optional). When locating using tag name, it is done by passing it as the first argument, locating using class name class is separated from tag name by dot and locating using id it is separated from tag name by hash. Lastly, locating using attribute is done using tag name and square bracket notation, and if an attribute has a value assigned the value is included within square brackets.

page.locator("css=h1") #Tag

page.locator("button.btn_class") #class

page.locator("button#btn_id") #id

page.locator("input[readonly]") #attribute

page.locator("input[value='val1']") #att with value

Web element can be also located using CSS pseudo class. Locating by text pseudo class matching text is loose, and for the strict match it is used text-is pseudo class selector. To locate element that is visible it is used visible pseudo class. To select element based on its position it is used nth-match pseudo class selector.

page.locator("h1:text('Nav')") # loose selection

page.locator("h1:text-is('Nav')") # strict selection

page.locator("div.dropdown-menu:visible")

page.locator(":nth-match(button.btn-primary, 4)")

Xpath Locators

Using XML querying language specifically created to select web elements is one more way to locate elements on the web page (passing "xpath=" is optional). Absolute path starts with slash(/) and relative path starts with double slash (//). It can be selected object based on attribute and value as well. Using Xpath functions provides more flexibility. Exact match of the text inside of element is located using text() function, and loose match is done using contains() function. That function can be used for other attributes as well, such as class.

page.locator("xpath=/html/head/title") # Absolute path

page.locator("//h1") # Relative path

page.locator("//h1[@id='navbars']") # Attribute and value

page.locator("//h1[ text()='Heading 1']") # Exact match

page.locator("//h1[ contains(text(), 'Head')]") # Loose match

page.locator("//button[ contains(@class, 'btn-class1')]") # class attribute

Other locators

If there are more elements of the same type with the same name, it is used locator(nth) function and passed 0-based index of the particular element we want to locate. This function can be appended to CSS selector as well, since it also returns locator object. To locate parent element it used double-dot (..). Selecting element by id or by its visibility can be also accomplished using locator method. Using filter() method it can be selected element either based on its inner text with has_text argument or based on containment of another element with has argument

page.get_by_role("button", name="Primary").locator("nth=0")

page.locator("button").locator("nth=5")

page.get_by_label("Email address").locator("..") # Parent

page.locator("id=btnGroupDrop1") # id

page.locator("div.ddmenu").locator("visible=true") # visible

page.get_by_role("heading").filter(has_text="Heading")

parent_loc = page.locator("div.form-group") # containment

parent_loc.filter(has=page.get_by_label("Password"))

Playwright basic features

Sunday, 07.04.2024

Test automation has become significant in Software Development Life Cycle (SDLC) with the rising adoption of test automation in Agile teams. As the scope increases, new tools for test automation are emerging in the market. Test automation frameworks are a set of rules and corresponding tools that are used for building test cases, designed to help engineering functions work more efficiently. The general rules for automation frameworks include coding standards that you can avoid manually entering, test data handling techniques and benefits, accessible storage for the derived test data results, object repositories, and additional information that might be utilized to run the tests suitably.

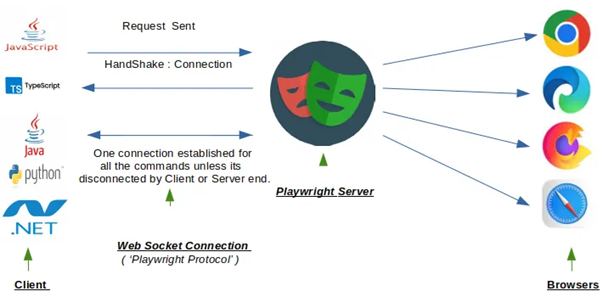

Playwright is a powerful testing tool that provides reliable end-to-end testing and cross browser testing for modern web applications. Built by Microsoft, Playwright is a Node.js library that, with a single API, automates modern rendering engines including Chromium family (Chrome, MS Edge, Opera), Firefox, and WebKit. These APIs can be used by developers writing JavaScript code to create new browser pages, navigate to URLs and then interact with elements on a page. In addition, since Microsoft Edge is built on the open-source Chromium web platform, Playwright can also automate Microsoft Edge. Playwright launches a headless browser by default. The command line is the only way to use a headless browser, as it does not display a UI. Playwright also supports running full Microsoft Edge (with UI).

Playwright supports multiple languages that share the same underlying implementation.

-JavaScript / TypeScript

-Python

-Java

-C# / .NET

All core features for automating the browser are supported in all languages, while testing ecosystem integration is different.

Playwright is supported on multiple platforms: Windows, WSL, Linux, MacOS, Electron (experimental), Mobile Web (Chrome for Android, Mobile Safari). Since its release in Jan 2020 Playwright has a steady increase in usage and popularity.

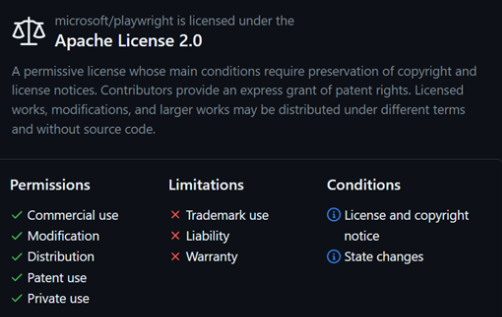

Playwright is Open Source - it comes with Apache 2.0 License, so all the features are free for commercial use and free to modify.

Architecture Design (Communication)

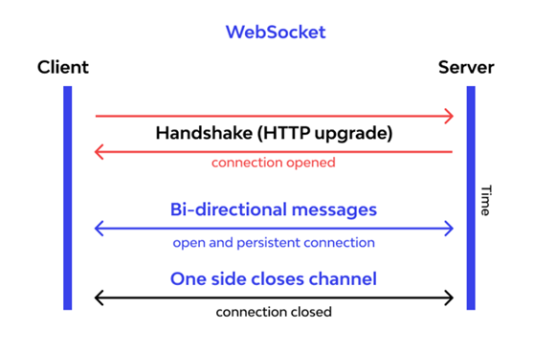

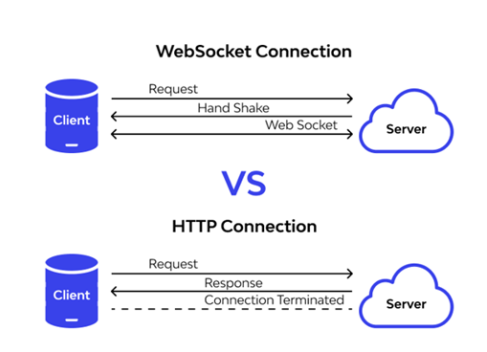

Playwright uses ChromeDevTool (CDP) – WebSocket protocol to communicate with the Chrome browser’s rendering engine. WebSocket is a stateful protocol.

Playwright will communicate all requests through a single web socket connection that remains active until all tests have been completed. This design might reduce points of failure during test execution and makes Playwright a more stable, speedy test execution and reduces flakiness.

Since Playwright protocol works on web socket connection, it means once the request is sent from the client end, there is a handshake connection established between client and playwright server. That is a single connection that stays open between code ( the commands written like click , fill , select ) and the browser itself. This connection remains open throughout the testing session. It can be closed by the client and server itself.

Further Benefits of using Playwright

Lambda functions and map()

Sunday, 24.03.2024

Function map() allows us to map a function to a python iterable such as a list or tuple. Function passed can be built-in or user-defined, and since it is not a function calling, just passing an adress, it's to be used a function name with no parenthesis(). Lambda function is an anonymous function defined in one line of code, and it is especially suitable for using in map() function. The code from all examples is stored on GitHub Repository in Jupiter Notebook format (.ipynb).

Python features covered in this article

---------------------------------------------

-map()

-list() for conversion to list

-lambda function

-get() function on dictionary

-tuple() for conversion to tuple

Function map()

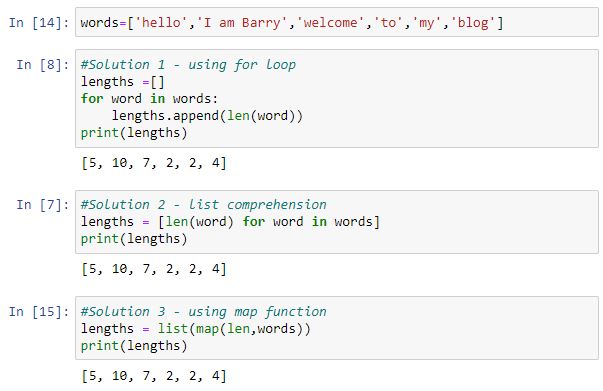

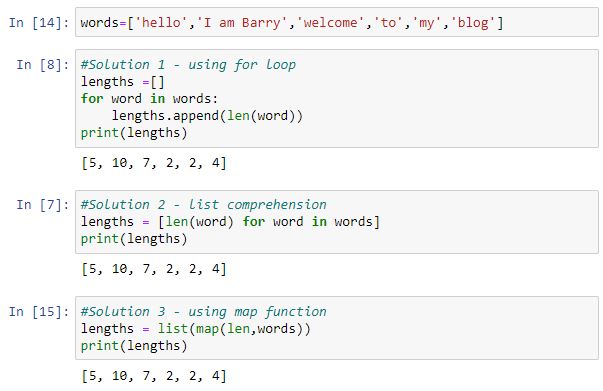

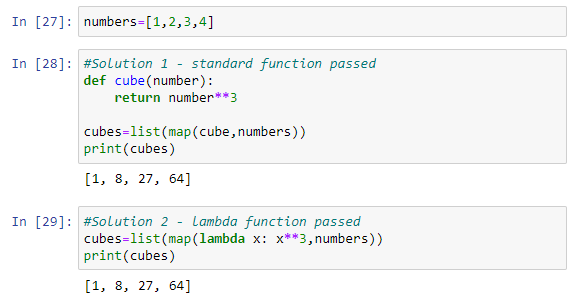

Map function helps us to be more explicit and intentional in code. Map function takes as arguments a function and an iterable on which elements passed function will be performed. It returns map object which can be converted to list using list() function or to tuple using tuple() function, as well as to many other types or classes. There are 3 examples that shows using map() function compared to other possible solutions in creating a new list that contains length of elements in the list:

Using lambda function in map

Using lambda function is a practical way for defining a map function, because it requires less lines of code and reduces dependency (user-defined function has to be defined previously if using a standard way). There are 2 examples illustratiing both ways, function passed to map() calculates cube of each list element:

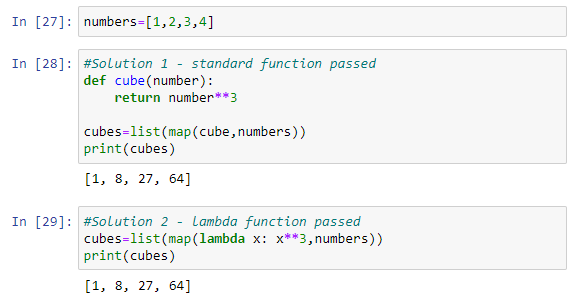

Applying map() to many lists, a dictionary and tuple

We can pass more iterables to map() functions, for instance two lists and define with a lambda function an operation to be performed on elements of each one. List can contatin dictionary elements, which can be safely accessed using get() function and passing 0 for the case that an element doesn't exist. Lambda function passed to map() function can return tuple as well. There are examples:

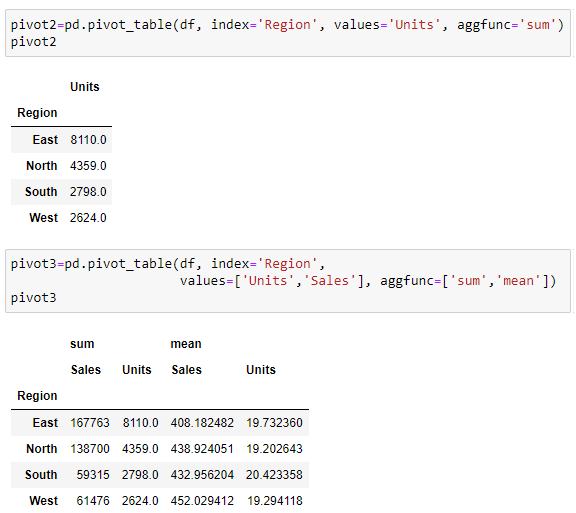

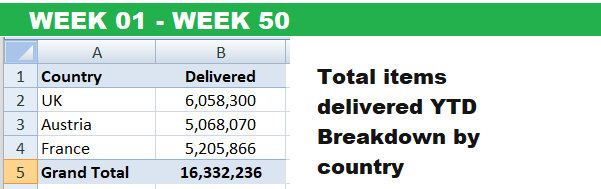

Creating Pivot tables in Python

Sunday, 25.02.2024

Pivot tables known from Excel (also known as Matrix tables from Power BI) can be created in Python as well. A pivot table is a powerful data analysis tool that allows us to summarize and aggregate data based on different dimensions. In Python, pivot tables can be created using the pandas library, which provides flexible and efficient tools for data manipulation and analysis.

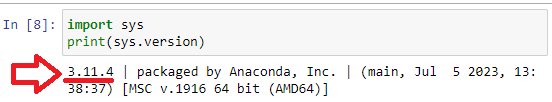

Python features covered in this article

---------------------------------------------

-pivot_table()

-sys.version

-pivot_table(values)

-pivot_table(agg_func)

-pivot_table(columns)

-pivot_table(fill_value)

-pivot_table(margins)

-pivot_table(margins_name)

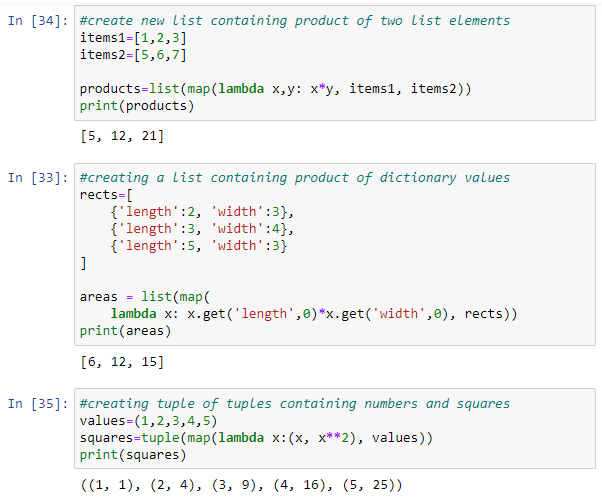

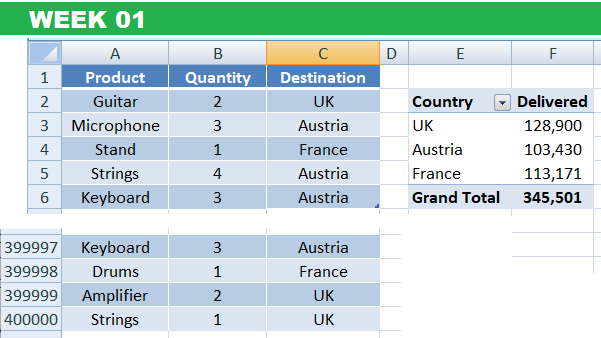

DataSet in Excel

is downloaded from GitHub and using head() function there are top 5 records shown.

The code used in this article is available as Jupiter Notebook on

GitHub repository

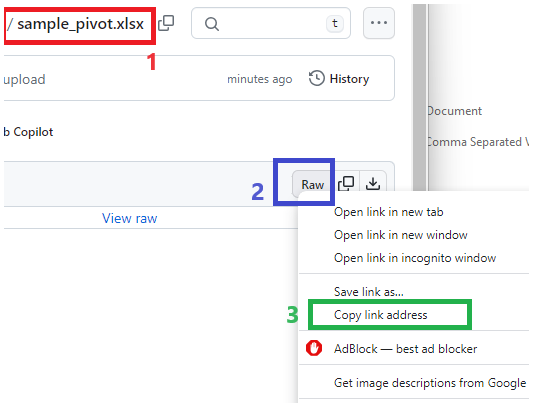

To ensure obtaining the right URL path from GitHub it has to be used Raw option. First click on the file(1) then on the Raw option(2) from the Right-Click menu select Copy link address option(3)

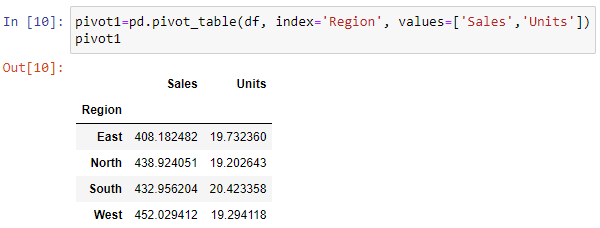

Let's create Pivot table using pivot_table() function that will show values of the columns Sales and Units with applied some aggregating function. There will be explicitly passed names of columns to be aggregated

Values shown in the table above are average (mean) values because 'mean' is the default aggregating operation in pivot_table() function.

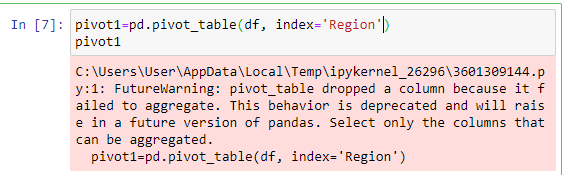

In previous versions of pandas there were automatically selected numerical columns that could be aggregated and dropped columns contatining other data types (strings, datetime...) for which it made no sense to aggregate. However, in the current version

it is still possible, but there is a warning that in future version it will not be possible anymore:

If we want to calculate another aggregate function, for instance sum function we can pass it as an agg_func argument to pivot_table function. Aggregating columns can be one or more, as well as aggregating functions:

To use autocomplete feature in Jupiter Notebook, it should be pressed TAB after dot:

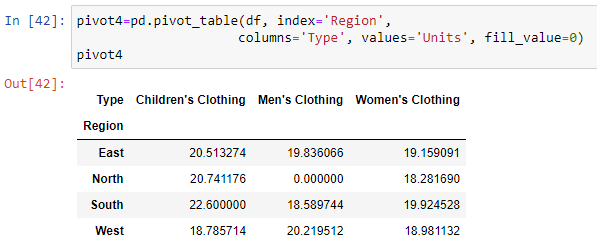

Breaking out data by region and also by type of sales in columns can be done using columns argument of pivot_table function. By using fill_value argument it can be filled empty cells in the DataFrame with specified values.

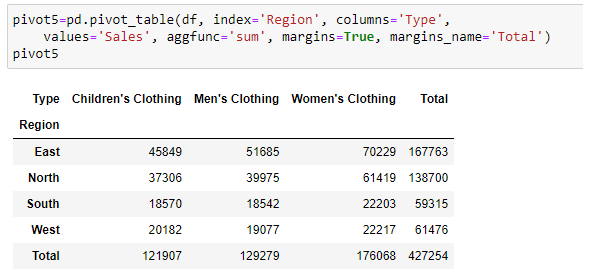

Showing totals can be done using margins argument of pivot_table function. Using margins_name it can be defined name of total column and row:

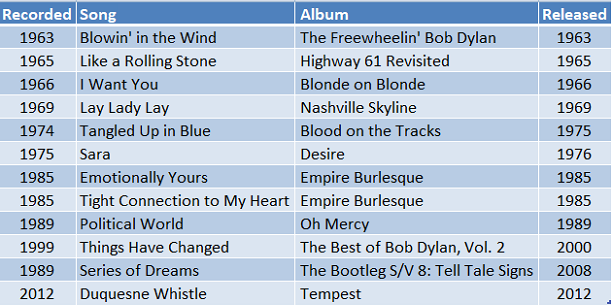

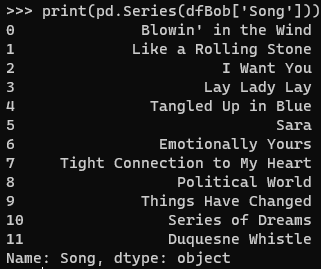

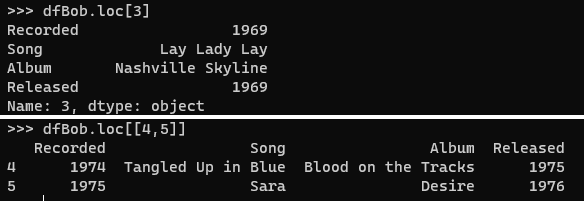

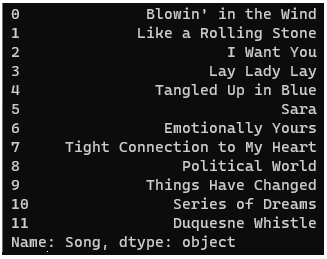

Exploring pandas Series

Sunday, 28.01.2024

In Data Anaysis process the Data exploration refers to the initial step, in which data analyst uses available feature of the tool or programming language to describe dataset characterizations, such as size, quantity, and accuracy, in order to better understand the nature of the data. Exploring data is just the first look, looking at it if it's going to be cleaned up doing the data cleaning process. Then it's going to be done the actual data analysis actually finding trends and patterns and then visualizing it in some way to find some kind of meaning or insight or value from that data.

The code used in this article is available on GitHub repository

Python features covered in this article

-----------------------------------------------

-DataFrame.dtypes

-Series.describe()

-Series.value_counts(normalize)

-Series.unique

-Series.nunique()

-crosstab

-%matplotlib inline

-Series.plot

-Series.value_counts().plot()

The data set that will be explored in this article is IMDB movies rating data set, that is available on GitHub repository

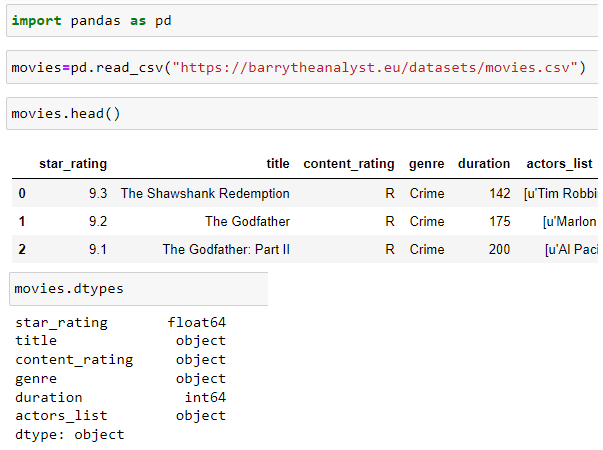

It will be loaded to DataFrame object and using DataFrame.dtypes first it will be explored column data types:

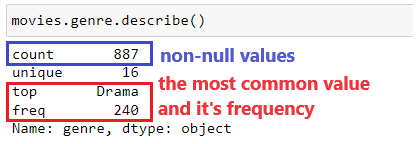

Exploring column [genre] can be started with method Series.describe() that will show some information about Series (column):

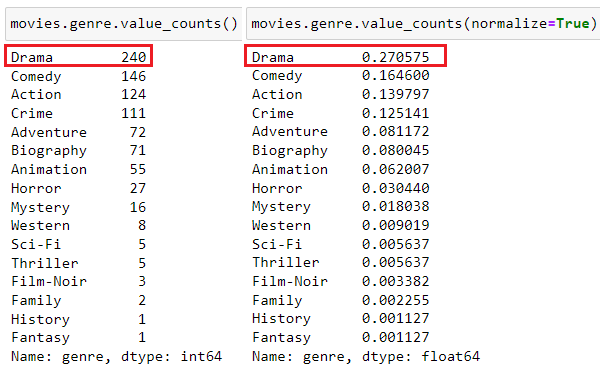

More detailed information will be shown using method Series.value_counts() with optional use of normalize parameter which will show percentages

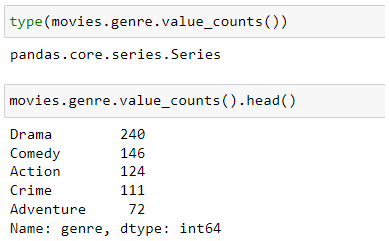

Type of object that value_counts() method returns is also Series, so all Series methods are available, for instance head():

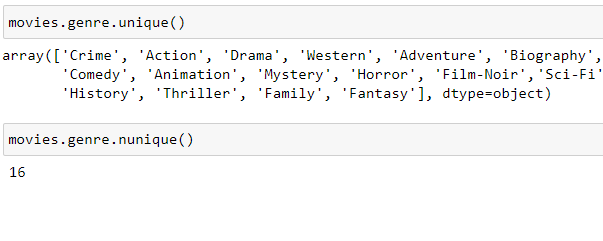

Using Series.unique() method there will be shown all uninque column values, and Series.nunique() method will return number of unique values.

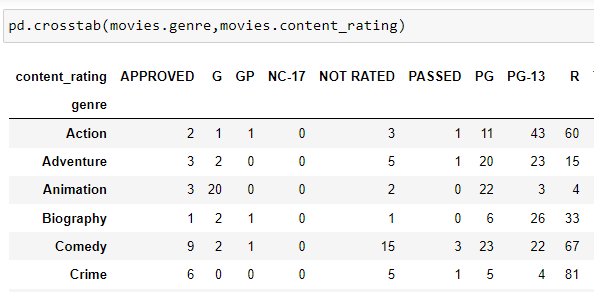

It can be performed cross-tabulation of the two different Series using crosstab() method. This will show one Series as column and another as rows and table will be filled with number of matching pairs - in this case showing how many genre has particular rating

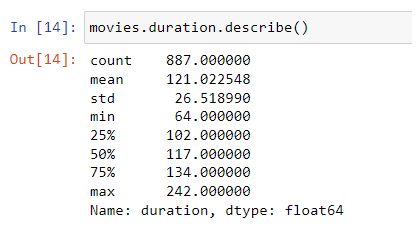

Method Series.describe() can also be applied to numeric column, and in that case it shows some statistics about it:

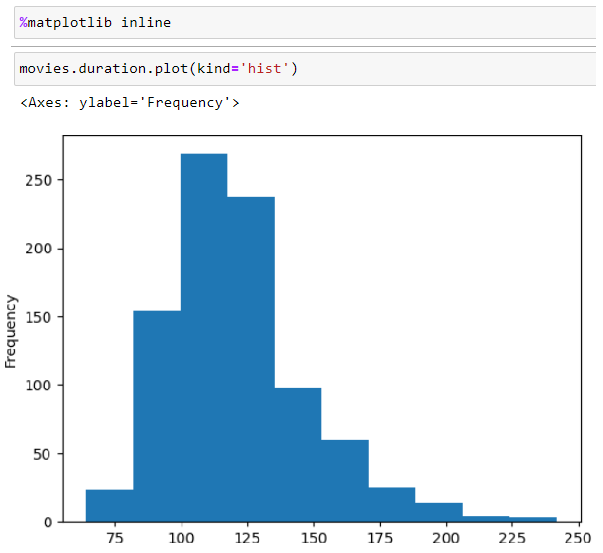

Data Visualization can be shown within Jupiter Notebook using %matplotlib inline command. the following example shows histogram of duration Series, that shows distribution of numerical variable:

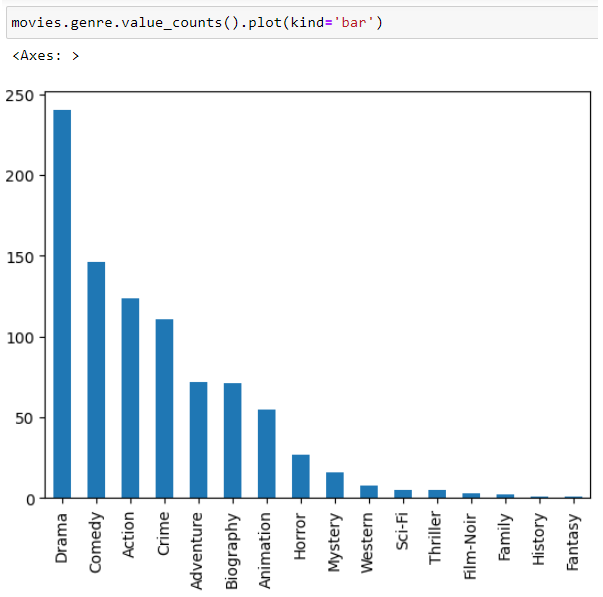

The last example shows bar chart of value_counts() applied to Series genre:

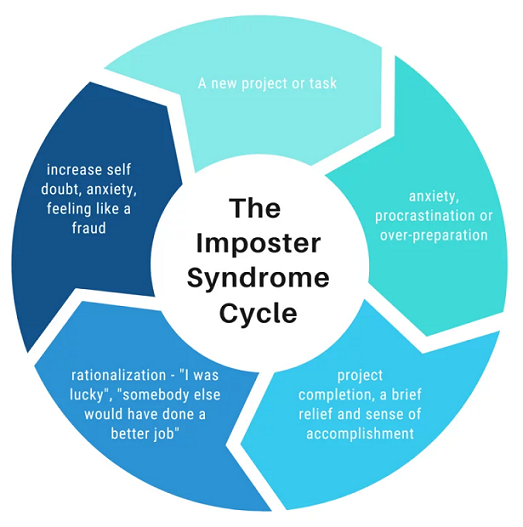

Is Data Analysis a Dying Career?

Sunday, 02.12.2023

Besides technical hard skills, a Data Analyst has to have also communication and social soft skills. This is important not only for the job - as the presentation of insights to stakeholders is its regular part - but also for communication about their job. As I seriously intend to be fully engaged as a professional in Data Analysis and stay into it long-term, I shared my plans a few days ago in a small talk with one colleague. He isn't in Data Business but the first thing he asked me is: Does it have a future, since AI can nowadays do so much of the tasks? Well, I told him that AI can help us a lot to be more efficient, more punctual and more productive. But that small conversation motivated me to research a little bit, asking myself: am I going to end up in a dead-end street? Starting to check some relevant facts & figures brought me back in 2012: Harvard Business Review (source: hbr.org) called The Data Science "the sexiest job in 21st century". It has been a hot topic for a decade, now the question is: Is Data Science dying?

NOTE: Terms "Data Scientist" and "Data Analyst" are commonly used interchangeably as well as in this article

Some people think (source: teamblind.com) that Data Science is no longer the sexiest career but it's becoming the most depressing career, since many companies are laying off Data Scientists becoming waste of money for them, and that the field is dying very fast. On the other had, there are headlines from 2022 (source: fortune.com) suggesting a different point of view:

So why are so many people

worried about job security in data science? In this worry coming out of nowhere? With recent news about coming

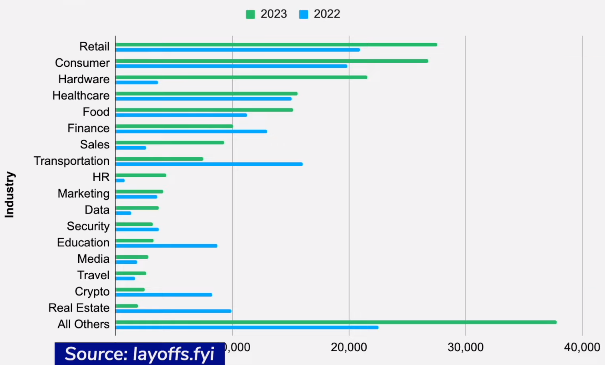

layoffs it's natural to be concerned. Comparing 2023 with 2022 accross many industries there are more layoffs in the current year in the most of them (source: layoffs.fyi):

Comparing to other technical roles (source: warntracker.com) layoffs of Data Scientists in Meta and Google are relatively rare, less than 5% in years 2022 and 2023. So, the data indicates that the perception of data scientists facing higher job loss risks doesn't align with reality, since Data Scientists have a relatively lower risk of layoffs compared to other roles considering the current trends in major tech companies.

Some Data Scientist reported (source: teamblind.com) that while others say the field is dying their own career keeps getting better, they keep earning more money and getting promoted. They firmly believe that Data Science is not shrinking but actually growing. They think that many companies still haven't figured out how to use data science effectively. Additionally they observe continuing trend of companies investing in data-driven products indicating that the demand for data science remains strong. Consequently they view data science as extremely relevant and highly sought-after career

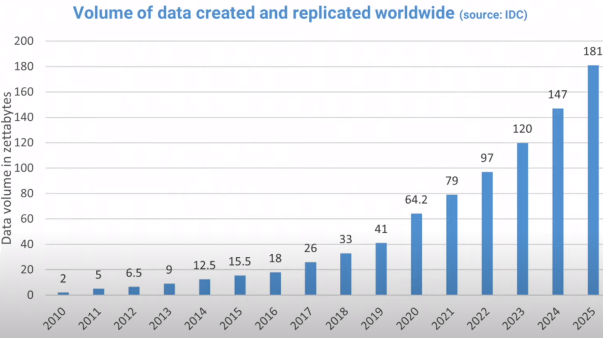

According to one study (source: statista.com) the amount of data in the world is expecting to reach 181 zettabytes by 2025 (1 zettabyte=10^21 bytes):

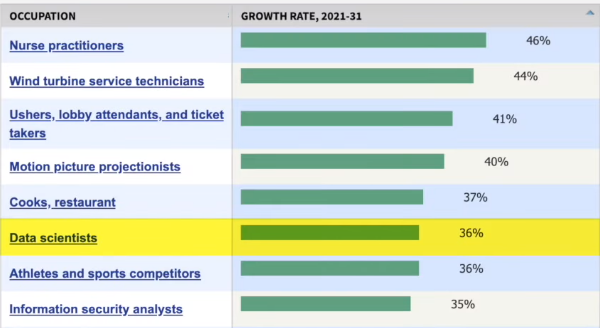

This tremendous growth has led to a high demand for data scientists and it even surpasses the rate at which colleagues and universities can train them. Information obtained from the U.S. Bureau of Labor Statistics (source: bls.gov) show that the number of jobs for data scientists is projected to increase by 36% from 2021 to 2031. making it one of the fastest growing occupations in the U.S:

The number of job opportunities for data scientists has increased significantly in recent years. Since 2016 there has been a staggering 480% growth in job openings for this occupation (source: fortune.com). Job postings on "indeed", a popular job search platform, have also seen since 2013 a notable rise of 256% percent (source: hiringlab.org). Also it is projected (source:bls.gov) that an average of 13,500 job openings for data

scientists will be available each year over the next decade as a current workforce retires and it needs to be replaced.

These figures highlight the strong demand for data scientists in the job market. In fact according to Glassdoor's list of the best jobs for 2022, Data Scientist is ranked as the third best occupation in the U.S. surpassing only by Enterprise Architects and Full Stack Engineer:

Considering the supply side of the story - workforce - many people are eager to enter this domain. In the recent announcement from the University of California at Berkeley (source: berkeley.edu) they are creating a new college for the first time in over 50 years. This new College of Computing, Data Science, and Society (CDSS) is born out of the growing demand for computing and data science skills.

Succeeding in Data Science is not only about technical proficiency. It's equally important to have a genuine passion for uncover insights from data, the curiosity to ask the right questions and the creativity to effectively communicate the findings in a manner that others can understand. Strong communication skills are highly valued in this field, as well as nurturing curiosity and being open to exploring new areas within Data Science.

Specifying rules using CONSTRAINTs

Sunday, 12.11.2023

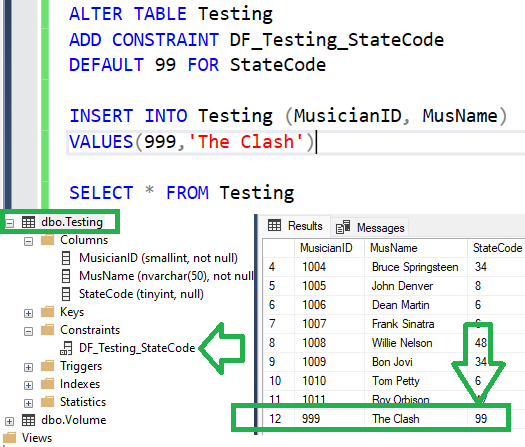

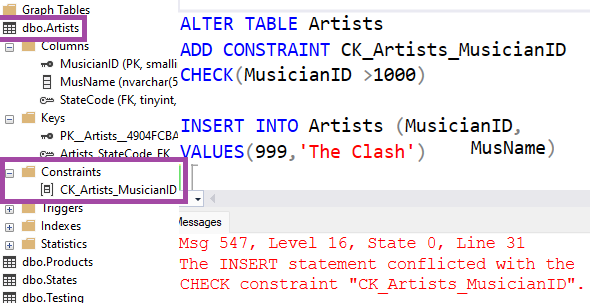

Foreign key is the field in the table which contains values from another table's primary key field. Foreign key is one of the available constraints in table definition and it enforces database integrity. There is also DEFAULT constraint - which will fill the field with specified value if not provided in INSERT INTO statement (if NULL value provided explicitly it will be inserted, not default value). There is CHECK constraint that returns boolean value - TRUE or FALSE, for NULL value it returns UNKNOWN.

SQL features covered in this article

---------------------------------------------

-FOREIGN KEY

-SELECT INTO

-DEFAULT CONSTRAINT

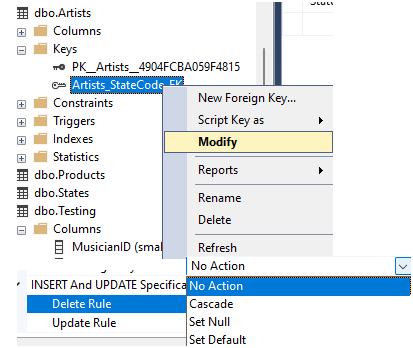

-CASCADING CONSTRAINT

-CHECK CONSTRAINT

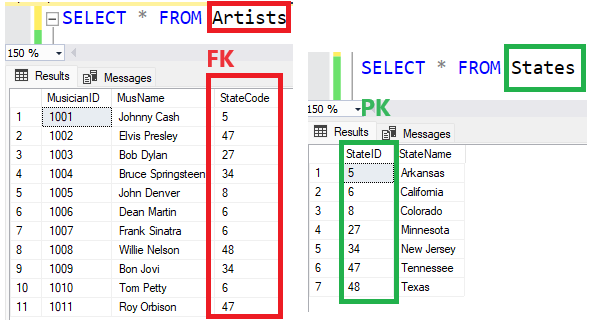

There are 2 tables in the database:

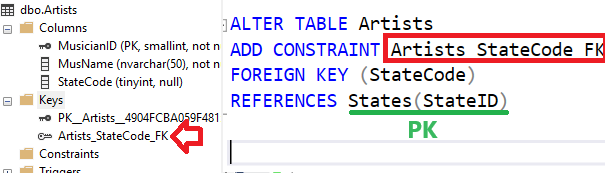

Adding FOREIGN KEY constraint will disable entering records in Artist table with value of the field State that are not in the PK field in State table:

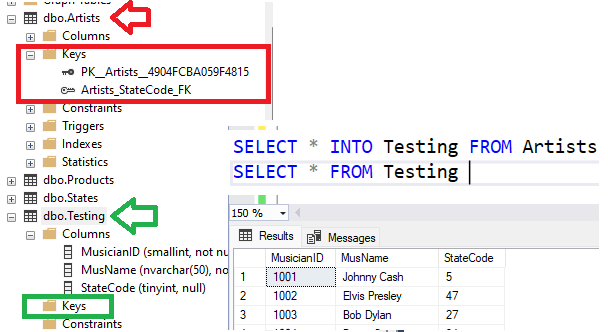

Copy table Artist to a new table for testing can be done using SELECT INTO statement. It wil copy data only, and neither PK nor FK constraints:

Create DEFAULT constraint will fill value if not provided. If provided NULL, field will be filled with NULL value, not the default value.